Research Computing Teams #135, 3 Sept 2022

Longtime reader Adam DeConinck wrote in, answering a question about Firecracker, a lightweight VM used for hosting tools like (as in last issue) CI/CD pipelines:

I love Firecracker, but so far there’s no support for PCIe pass through (eg GPUs, NICs, etc). Which I find really unfortunate […] because research computing in general is making heavy use of such devices, wherever they get them. I’d love to see a micro-VM approach in HPC at some point. Or really anything that can provide a better multi-tenancy story for shared research computing facilities in an increasingly security-conscious world. And as some Kubernetes devs have reminded me lately, the kernel (and containerization) are not a security barrier. […] Selfishly, I just want a VM platform that's as easy to use in HPC as Singularity or Enroot.

This was new information to me - thanks!

The current top question on the polly list (which has been pretty quiet - cough cough) is on writing a job advertisement. This will probably take more than one issue to address. It’s an absolutely key question (and I’m going to write out “job advertisement” pretty consistently, because too often we forget that’s exactly what it is: a document whose purpose is to advertise our job and attract potential candidates), but first I want to address the wrong-way-round approach to hiring I see too often in our community (and, to be fair, in many, many others).

A very common meta-mistake a lot of teams make when hiring is to consider it as a sequence of discrete, loosely related checkboxes, to be completed in the order that they’re needed. Write up a list of features we expect a new candidate to have; post it some places; recruit some candidates; figure out some interview questions; choose someone to give an offer to. Then once someone’s hired there’s a second series of checkboxes, which must be completed in order: onboarding; figuring out what they should work on; evaluating and managing their performance.

I call this a meta-mistake because this mental model then causes a bunch of mis-steps and needless hassle, for the manager, the team, and the candidates.

The truth is that everything described above is best worked on as a single coherent process. The process begins with a funded but still vague need on the team, and ends with a successful, integrated new team member killing it after their first six months. Adopting that mental model and taking it seriously means front-loading some of the work, but enormously increases the chances of success. Not only will you end up with a team member who is doing well, but you’ll have had a better candidate pool, and the non-hired candidates, who might be (or know!) a good match for future opportunities, will think well of the professionalism of the process.

And like most processes, when planning for how it will unfold, it’s best to begin with the end in mind.

Let me begin with a bit of unsolicited advice for your own career development. When you’re next interviewing for a job, and it’s your turn to quiz the hiring manager, ask the following common question: “What does success look like in this job? What would make you say, after six months, ‘This was a good hire, this really worked out well’?” This is a common, almost banal, question, but one where the answer can be very helpful for you in evaluating the job. So is a lack of an answer. If they do not have a coherent and confident response, that’s a red flag. They do not have a plan to make sure a new hire succeeds in their job; they can’t, because they're not even sure what outcome they’re aiming for.

From the hiring team’s point of view, the whole purpose of any hiring process can be summed up by them being able to honestly say to themselves “This was a good hire, this really worked out well.” From a candidate’s point of view, the purpose is to end up in a job that can put their skills to good use, and to succeed at a job they enjoy. A hiring team able to clearly articulate that successful end state makes both sides more likely to get what they want.

So it’s disappointing how many times I’ve lobbed this softball of a question at hiring teams (as an advisor or as a job candidate) and watched people scramble and duck for cover as they search for something to say in response.

Lou Adler relentlessly popularized the idea of “performance-based hiring,” focussing first on what the successful candidate will actually do several months in (“performance profiles”) rather than looking for people who have a laundry list of features the hiring manager thinks they should have. His book has some really strong sections. But it’s not a new idea. There have always been managers who thought of hiring, and communicated their open positions, using precisely this approach.

There are some completely general advantages to planning the hiring process starting from the final state, success three to six months after the hire:

- You have a pretty good sense of what success looks like on your team; that end point is a much more solid place to start from than some hypotheses about what features a successful job candidate might walk in with, when in fact there are probably several combinations of input skills someone could have that would work out just fine,

- You are much less likely to needlessly exclude excellent candidates simply because they didn’t follow a career path that generated the laundry list of features (advanced degree, five years with technology X) that “typical” hires might have acquired along the way,

- You’ll find it easier to attract good candidates by including the successful end state in your job advertisements, because they’ll be able to see themselves succeeding in the role,

- You’ll attract fewer poor matches, who wouldn’t enjoy the work and its challenges, for the same reason,

- You’ll find later stages of planning (meaning earlier stages of the resulting hiring process) much easier - drafting onboarding plans, teaching resources, evaluation criteria, candidate packets, recruiting approaches, and job advertisements.

There are particular advantages for research computing and data teams, too. Say you’re an RCD team looking for a new data scientist, research computing facilitator, or software developer. Often these teams have two or more quite different areas of domain expertise (say quantum science or digital humanities) that they want to grow in, but they’re not fussed about which specialty they hire for first (zero points for guessing whether I think that ambivalence is a good idea, but it’s a situation some teams are stuck with). Clearly describing a successful research facilitator on your team, supporting research in their specialty, is a much more fruitful starting place than trying to come up with a Venn diagram of previous experience and skills spanned by the two areas. Especially when this might be your first digital humanities hire and the team would struggle to come up with a skill list to start with!

With a clear view of what success looks like after four months, the team can start planning a road to get there for a new hire: what would be expected after two months; after one month; after two weeks; after one week. Hashing out and creating some agreement about that successful end state with a team is a very useful discussion to have.

These should be quite detailed, and when you agree on them they can be incorporated into a job advertisement. Here’s a couple I’ve written for two different sorts of jobs (note the longer timescale in the leadership position):

Delivery Manager:

A successful hire in this role would, by 3 months:

- Have a broad understanding (even if still shallow in some areas) of the project and its ecosystem, and know the people involved quite well

- Have taken leadership in running technical planning meetings for short-term planning (sprints, etc)

- Be managing the backlog, and following up on ticket status, with enough diligence that most team members would agree that the status of current project work is significantly clearer

By 6 months:

- Have a solid high-level understanding of all technical components of the project, and of the project’s role in its ecosystem

- Have taken leadership of longer-term technical planning meetings, and of writing planning documents for the team and the PIs

- Have started being increasingly present and visible in meetings with external stakeholders

- Have started to write and contribute to internal and external project technical documentation

- Be continuing to make improvements in the clarity of the work in-flight, and the coherence with which new work is being issued and communicated

- Be starting to make recommendations for substantial changes to project management structure and rituals

And by 12 months:

- Be directly communicating overall project status and direction to PIs and external partners

- Be a trusted voice in discussions involving choosing new directions

- Be a trusted voice in discussions regarding hiring

Network operations/observability Senior IC:

In your first month you will:

- Meet with the teams you’ll be working with, and start building relationships:

  - Our team

  - Institutional IT Security

  - Key researchers

  - Our collaborators at [other center]

- Learn about our systems, including:

  - [HPC clusters]

  - [data management system], and [joint centre run by other team]

In your second month, you’ll:

- Start working with [hiring manager] and key team members to create an overall plan and some initial steps for the operational visibility transformations we want to see

- Supported by [hiring manager], start discussing these first steps with individuals on our and collaborating teams, and get feedback

- Incorporate feedback into our plans

- Identify junior team members for technical mentorship

In your third month, you’ll:

- Supported by [hiring manager], present the overall plan and first quarter’s worth of steps to key stakeholders

- Begin implementing the first stages of the plans, helping junior team members learn and take part where there’s a good match

Once an early progress plan firms up, you can continue working backwards: begin creating an onboarding plan to help them get there, work out some prerequisites for what a person would need to have and be able to learn to get there, and define evaluation criteria. I have an early draft of a worksheet for that planning here (letter, A4). I’ll come back to that process later. Next issue, assuming that work’s been done, I’ll address the actual question: how to put that information into a job advertisement.

Until then, on to the roundup!

Managing Teams

Good Managers Matter a Lot! (Twitter Thread) - Ethan Mollick

What you do matters, and there’s a reason that my priority right now is to focus on front-line managers. Here’s a Twitter thread by Mollick, a professor in the Wharton School at the University of Pennsylvania, highlighting some research results. The ones that stood out to me because they were more about front-line managers:

- The difference in quality among managers in the game industry at EA Games accounted for 22% of the variation in game revenue

- Replacing a bottom-quartile plant manager in a car factory by a top-quartile manager results in a decrease in hours needed to build a car by 30% (note that’s hours needed - this isn’t just getting people to work harder)

- Replacing a bottom 10% manager with a top-10% manager drops costs by 5% just by lowering the number of people that leave the team.

The experts in our teams doing the hands-on work deserve to be supported, have opportunities to grow, and have good working relationships with their peers and their managers. The research we support deserves that 22% or 30% boost in productivity that comes from a well-functioning team. (Honestly, I’ve been in dysfunctional teams and healthy ones, and 22-30% seems small.) And I’m tired of a research ecosystem which doesn’t recognize the value of managers, the effort they put in, and the stress they’re put under by not being given support.

(And, because I’ll get questions otherwise: the rate of people leaving a team shouldn’t be zero, change is good and healthy, for the individuals and for the team! But good team members should leave because they have fantastic new opportunities that will grow them in new ways, not because they feel under-supported and trapped with no input or career path).

(Somewhat relatedly, also via Mollick - toxic workers cost a team more than twice as much as hiring a superstar benefits a team)

Don’t Shy Away or Risk Cowardly Management - Aviv Ben-Yosef

Let’s start with a simple test for how you can self-assess whether you are being too shy. If you finish most workdays with a weighing down feeling about an issue that you have not spoken to the relevant person about.

I’d prefer the word “timid” to “shy” above, but Ben-Yosef is exactly right. Tech and academia have a lot of the same interpersonal issues:

I’m not a big fan of stereotypes, but there’s no denying that a lot of leaders in tech are former engineers–a group no one would claim is known for being overly talkative. Providing frank feedback about technical matters is one thing, but saying what’s really on your mind, especially when it comes to personal matters, is something that you might find quite a bit harder.

Not giving a team member critical feedback is a small act of cowardice. An understandable one — we've all been there — but still. Your team members deserve to know when they’re not meeting expectations. You’d want to know from your boss! You’d be mortified to hear that they had been grumbling to themselves about something while not telling you about it. Give timely feedback: respectfully, kindly, but firmly. Feedback is a gift.

Technical Leadership

Realistic engineering hiring assessments - Tapioca

Explicitly testing for needed skills is an important tool to have in the toolbox as you’re constructing a hiring process. For technical staff, that would be some kind of technical test - it could be having people walk through code, review code or architectural choices, or some kind of take-home assignment.

There are real downsides to take-homes! Many potentially excellent candidates don’t have 2-3 hours per job application to spend in their off time doing work for a job offer that might never come. For technical staff who generally don’t need to “perform on command” in front of an audience, there are also real downsides to doing it live in front of an audience (many people, especially those in groups that are not overrepresented in our fields, demonstrate less confidence in those situations and so are graded much worse), and real downsides to having people demonstrate a code/architecture portfolio (lots of people don’t code in their spare time and are not at liberty to show their work from previous jobs).

Increasingly I’m coming down on the side of offering candidates a choice between options, and having one of those options be a short take-home (replacing, rather than in addition to, the equivalent number of hours of interview process). Tapioca (which, to be clear, sells a platform for running take-home exercises at significant scale) has a curated list of ranked take-home problems, if you need inspiration. To my mind, many of these are too long to be justified (although they might be excellent discussion problems along the lines of “think about how you might do this and the tradeoffs of different approaches, and we’ll discuss it in the interview”). But they cover a wide range of skills and approaches.

Managing Your Own Career

Being-Doing Balance over Work-Life Balance - Jean Hsu

I’m very cautious about sharing traditional productivity stuff here, because (a) efficiently doing the wrong things doesn’t help anyone, and (b) lots of “productivity hacks” work great for some people while not working for most.

But there’s also a bit of a reverence in our line of work for being productive, or worse, for being busy. It can be deeply unhealthy, but it’s also pretty seductive for those of us who got into this work because we’re high achievers.

Hsu’s distinction here I think is right; “Being-Doing” is a more useful mental model than “Work-Life”. If you’re spending your days depleted because you feel the need to constantly be accomplishing things at work and then you spend an equal amount of time just as driven to accomplish things at home, that’s not great, even though work and life are balanced. We need to find some time to just be, without any accomplishment at stake. That can come from coffee with workmates, family time, honing a craft for its own sake, hobbies, and any of a number of other things that work for us.

Product Management and Working with Research Communities

SQLite: Past, Present, and Future (PDF) - Gaffney et al, VLDB ’22

Business school students learn a lot of strategy through case studies, a form of education we generally don’t get in STEM fields. So I like to read (and include here) case studies of products relevant to our line of work. Reading them helps us understand a successful product team’s thinking, what they’ve optimized for and why, and how they make their decisions.

(Someday I’d very much like to assemble a compendium of case studies of strategy for various research computing and data teams. Not finished strategic planning documents, which aren’t useful outside of the team’s immediate community, but how and why the choices that informed that document were made, which could be extremely instructive quite widely.)

This is a nice history of SQLite, the choices it made, and what it chose to optimize for. There’s a long, unflinching comparison to DuckDB, which absolutely smokes SQLite for analytic workloads (and in turn performs vastly worse on transactional workloads), and a discussion of what the team would and wouldn’t be willing to compromise on to improve SQLite’s analytics performance.

Another article on how hard it is to find postdocs this year, this time in Nature.

I sympathize with the PIs in this situation, many of whom are junior faculty struggling to establish themselves. But I’m not sorry this is happening.

Every week researching the job board I see dozens of postdoctoral postings that should clearly be staff jobs. Instead they are precarious year-to-year positions with poor pay and worse benefits. That was sustainable as long as postdoctoral candidates were willing to sustain it in exchange for possible future faculty positions. That willingness has clearly changed. Time will tell if it has changed permanently.

Research Software Development

About Scientific Code Review - Fred Hutch Data Science Lab

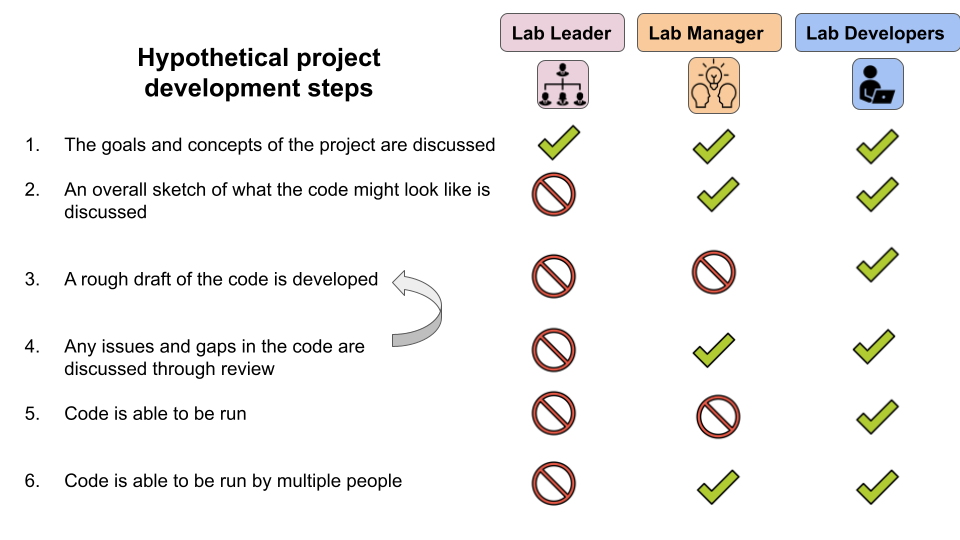

Here’s a good introductory overview of code review for science (and in particular data work) by the Fred Hutch Data Science Lab. It’s a great resource for teams getting started, whether a staff team or maybe a research group you’re working with. One thing I like about this is that it breaks the various tasks up between roles - developers, lab managers/team leads, and lab leaders. Groups in different situations may have a slightly different assignment of tasks to roles, but it’s nice to see the roles clearly distinguished. Then there

Groups getting started in code review might also be interested in an upcoming (Oct 12) webinar hosted by the Exascale Computing Project entitled “Investing in code reviews for better research software”.

The Australian Research Data Commons is sponsoring an Australian science award recognizing research software! Let’s see other groups follow suit.

Research Data Management and Analysis

So! You want to search all the public metagenomes with a genome sequence! - C. Titus Brown

Brown talks about exciting work by Luiz Irber to enable similarity searches across metagenomes, extremely complex and large data objects hundreds to millions of times the size of a genome. This is an extremely useful scientific capability, but with their previous technologies it would take minutes per search.

Like the DuckDB vs SQLite comparison above, choosing the right database for the job makes a huge difference! Irber tried another implementation using RocksDB, an embedded key-value store rather than a tabular relational database, and search times dropped from minutes to seconds. This makes lots of new things possible - now the team is working on sketching local private genomic data in the browser (using WebAssembly, which you will know this publication is almost as big a fan of as embedded databases) and interactively querying a web service.

New data types are changing research needs dramatically, and technologies previously not really useful for research computing are starting to play increasingly important roles. This is an extremely exciting time to be supporting this kind of work!

(Beating one of my drums for the umpteenth time - is this a software development project? A systems project, for the hosted service? A data management and analysis project? Like an increasingly large fraction of all impactful research computing and data efforts, it doesn’t fit into one silo or another - it combines the contents of two or more. While the categories may work ok as section headings for a weekly newsletter, dividing our community into research software development or research systems or research data is actively counterproductive).

Meta’s Velox Means Database Performance is Not Subject to Interpretation - Timothy Prickett Morgan, The Next Platform

Data lakes are increasingly important for data-intensive research computing teams in a way that seemed extremely unlikely even ten years ago. That means “federating” data across multiple data sources. Those doing that work may be interested in another VLDB paper Morgan summarizes, describing Velox, a uniform high-performance (C++) query execution engine being tied into multiple data systems:

Velox is currently integrated or being integrated with more than a dozen data systems at Meta, including analytical query engines such as Presto and Spark, stream processing platforms, message buses and data warehouse ingestion infrastructure, machine learning systems for feature engineering and data preprocessing (PyTorch), and more.

Emerging Technologies and Practices

Paving the way for a coordinated national infrastructure for sensitive data research - DARE UK

As research computing and data continues to expand beyond basic research and the physical sciences, environments and methods for responsibly handling sensitive data in a trusted manner are becoming more important. Here’s a report on phase one of the UKRI “Data and Analytics Research Environments” (DARE) program, which sought to identify key challenges and needs. It offers concrete high-level recommendations on demonstrating trustworthiness, researcher accreditation and access, accreditation of the environments, data and discovery, federation of these environments, building capability and capacity, and providing funding and incentives.

Of particular note, under “Capability and Capacity”, the report emphasizes the need to focus on clear career pathways, better visibility and recognition of the technical roles, better salaries, and excellence in training.

Random

Unless you’ve been living under a rock this week (and honestly, these days who could blame you) you’ve heard of Stable Diffusion, a publicly released image model a la DALL-E from Stability AI (distributed via Hugging Face) that you can download and run yourself. People are doing remarkable things with it, especially with the image-to-image functionality (where the prompt includes an image). Some are helping you create animations interpolating between two text prompts, others are explaining what diffusion models are, others still are creating searchable indices of some of the training set, or getting it to run on Apple silicon.

Interesting - Sioyek is an open source PDF viewer specifically for academic and technical papers, with features like a table of contents for navigation and smart links to figures, tables, and references, even if the actual PDF file doesn’t provide those things.

A very promising-looking course for getting started on GCP for bioinformatics.

A really nice visual walk through of how pointer analysis works, and in particular how the open source cclyzer+ tool works with LLVMed code.

A short handbook on writing good research code, which might be a good resource for trainees you work with.

Apparently if you’re the maintainer for software that configures monitors, you end up with a collection of all the weird bugs monitors have (and blame you for).

Naming things is hard. Introducing USB4 2.0.

Maybe relatedly, this week I found out that cram was an interesting looking tool for writing command-line test suites; and cram (no relation) is an interesting looking dotfile manager.

Claris is renaming FileMaker Pro, causing computer enthusiasts my age everywhere to cry, “wait, FileMaker is still a thing?”

Continuing the old-person computing nostalgia theme, apparently people have finally come up with a use for the Commodore 64 (as opposed to the clearly superior TRS-80 Color Computer which just happens to be what I had) - turning it into a Theremin (with a nice explanation of how they work. Theremins, not C64s.)

That’s it…

And that’s it for another week. Let me know what you thought, or if you have anything you’d like to share about the newsletter or management. Just email me or reply to this newsletter if you get it in your inbox.

Have a great weekend, and good luck in the coming week with your research computing team,

Jonathan

About This Newsletter

Research computing - the intertwined streams of software development, systems, data management and analysis - is much more than technology. It’s teams, it’s communities, it’s product management - it’s people. It’s also one of the most important ways we can be supporting science, scholarship, and R&D today.

So research computing teams are too important to research to be managed poorly. But no one teaches us how to be effective managers and leaders in academia. We have an advantage, though - working in research collaborations has taught us the advanced management skills, but not the basics.

This newsletter focusses on providing new and experienced research computing and data managers the tools they need to be good managers without the stress, and to help their teams achieve great results and grow their careers.

Jobs Leading Research Computing Teams

This week’s new-listing highlights are below; the full listing of 214 jobs is, as ever, available on the job board.

Software Development Manager - Quantum Software - Xanadu, Toronto ON CA

Xanadu is looking for an experienced Software Development Manager to lead the Core Quantum Software team. The team is developing PennyLane, an open-source framework for quantum machine learning, quantum computing, and quantum chemistry. Although quantum software development experience is not required for the role, an advanced degree in physics, math or computer science is preferred.

Machine Learning Research Lead, Energy Waste - Understanding Recruitment (Recruiters), Cambridge UK

As a Machine Learning Research Lead / Manager you will be part of this company's vision to reduce energy waste; using a combination of complex machine learning algorithms, Bayesian Inference, Gaussian Process, Probabilistic/Agent-Based Modelling and Time Series Forecasting to help them achieve this goal. Realistically what we're after is a Machine Learning Research Lead / Manager who is not afraid of taking risks and who is going to push their business forward within the UK and Europe. For this position, you will primarily be working as a people manager and using your prior technical experience to drive career development in the AI team.

Engineering Manager, Learning R&D - Duolingo, Pittsburgh PA USA

The Learning R&D Area works on projects that aim to improve how well we teach languages on Duolingo. This area is responsible for the core learning experience, from optimizing and personalizing the bite-sized lessons that are iconic to Duolingo, to investing in newer features like short stories to improve reading comprehension, ways to learn new scripts and alphabets, and giving learners pronunciation feedback. You will play a significant role in making sure Duolingo delivers on its promise to teach you a language in both a fun and effective way!

Senior Technical Project Manager - BenchSci, Remote CA or US or UK

We are currently seeking a Project Manager to join our growing Platform Delivery Team! As part of the job, you will drive the end-to-end delivery of OKRs and projects. Reporting directly to the Manager, Platform Delivery, you will work closely with a number of stakeholders across the organization to make sure projects are successfully delivered to our customers. You’ll have multiple projects on the go at any given time, so you should be able to easily handle competing priorities. This role will support our core infrastructure team so you should have a DevOps engineering background or experience supporting core infrastructure teams within a product environment.

Manager, Engineering Development Group - MathWorks, Natick MA USA

Hire and lead a high performing team of engineers and computer scientists with a variety of skillsets; Support the career growth of team members through coaching, mentoring, and feedback; Enable the team to provide high quality, specialized product support to our customers while helping the team build product expertise and customer workflow knowledge; Jump in, roll up your sleeves, and take the lead to improve processes

Director of Advanced Research Computing - Cardiff University, Cardiff UK

Cardiff University has been a pioneer in the provision of supercomputing to its diverse community of researchers. The University is now seeking to appoint a forward-thinking research computing leader to shape and deliver the future of advanced research computing services to its world-class research population. The Director of Advanced Research Computing will take the lead in defining the future strategy, operating model, service offering and technology roadmap to build on Advanced Research Computing at Cardiff (ARCCA)’s reputation for high-class bespoke research computing provision.

Senior Manager, Informatics - CVS Health, Parsippany NJ USA

Responsible for the successful delivery of analyses and analytic solutions to meet business needs, interacting directly with internal and external clients and colleagues. Acts as the analytic team lead for complex projects involving multiple resources and tasks, providing individual mentoring in support of company objectives.

Senior Manager Research Computing - Boston Children's Hospital, Boston MA USA

The Senior Manager Research Computing shall be responsible for: Directing/Managing/Supervising the design, development, implementation, integration, and maintenance of research technology infrastructure and systems capabilities to support the organization's business objectives. Leading the design, implementation, and management of security systems and redundant data backups. Ensuring the development of cost-effective systems and operations to meet current and future research requirements. Overseeing the analysis of research problems and leading the evaluation, development, and recommendation of specific technology products and platforms to provide cost-effective solutions that meet business and technology requirements. Ensuring design of best-fit infrastructure, network, database, and/or security architectures.

Sr. Quantum Systems Architect - Microsoft, Redmond WA USA

As a Senior Quantum Systems Architect you will have the opportunity to: Develop new quantum applications and determine the quantum resources needed to execute them. Design the subsystems of the complex architecture of the quantum machine. Drive cross-group collaboration between hardware & software engineers, partnering closely to create industry-defining breakthroughs. Drive clarity out of ambiguity in a dynamic, developer environment.

Quantum Algorithm Scientist - Barrington James (Recruiter), Quebec QC CA

Quantum Algorithm Scientist required. The first and main objectives will be to learn and develop the initial expertise while starting to support the business development actions and engage in preliminary discussions with our partners. As you will work among top-class engineers and scientists, you'll learn fast and naturally take the full dimension of the role, which is to become a key stakeholder of the quantum software community in North America. Build local expertise on quantum algorithms and work in connection with algo & use case teams to deliver near-term quantum advantage to our partners. Launch, lead, or supervise new application projects with partners (industry or academics), building new use cases using our most promising algorithms.

Data Architect - The Health Foundation, London UK

The Data Architect will work in the Improvement Analytics Unit (IAU) which is part of the Data Analytics team. The team conducts high-quality, in-house research and analysis, and collaborates with the NHS to develop approaches to improve health care that can be applied at local and national levels. Specifically, the successful candidate will support the IAU, a joint unit with NHS England that provides rapid feedback about the effects of new models of care and develops robust approaches to data management and information governance, applying analytics directly to real-world problems.

Senior Data Science Manager, Urban Big Data Centre - University of Glasgow, Glasgow UK

You will provide strategic leadership in data engineering, data science, research computing technology, and information governance for a centre which is pioneering developments in urban analytics and computational social science. You will be responsible for developing, managing, and implementing research computing, data, and information services within UBDC. You will lead engagement with key internal and external stakeholders including data and technology service providers, UKRI and other funders, government partners, and College and University service and senior management.

Lead Bioinformatics Analyst - St Jude Children's Hospital, Memphis TN USA

You will work closely with research team members to analyze large-scale genomic datasets including WES, WGS, bulk and single cell RNA-seq, Cut&Run and ATAC-seq data, to identify novel cancer predisposing germline variants and interrogate the impacts of these variants on downstream gene function. You will develop, improve, modify, and operate data analysis pipelines, generate and provide analysis results and reports, and perform requested custom analyses.

Data Architect - University of Sydney, Sydney AU

The Data Architect acts as the subject matter expert to lead initiatives that support delivery of data to empower university staff to enable impactful teaching and research, drive sustainable performance, and deliver outstanding student experiences. This is a key role in developing strong stakeholder relationships to understand opportunities and challenges and support the University in implementing a Data Strategy that will enable data to be used to suit a broad range of purposes.

Manager, Statistics/Data Science - Pfizer, Groton CT or Lake Forest IL USA

You will design, plan and execute statistical components of plans for development and manufacturing projects. This will assist in establishing the conditions essential for determining safety, efficacy, and marketability of pharmaceutical products.

Data Science Manager – Research and Productization of ML/AI & Analytic Automation Capabilities - Deloitte, Various USA

This is a high-profile role in a well-funded team. You will have ownership of the directed research and productization of transformational ML/AI capabilities for large clients. You will combine leading ML/AI open source libraries and techniques to prototype the intelligent automation of cross-channel communications for large (multi-terabyte) clients. The data engineering team is then responsible for the coding of your team’s proven prototypes into enterprise grade products for our large clients. We have a great team of machine learning experts, data scientists, infrastructure engineers and technical writers, all of whom are committed to delivering first class software for downstream clients.

Operations Manager, Research and High-Performance Computing Support - McMaster University, Hamilton ON CA

We are seeking candidates for the Operations Manager position within the Research and High-Performance Computing Support (RHPCS) unit. As part of the Office of the Vice-President, Research, RHPCS supports the high-performance and advanced research computing needs of McMaster's diverse research community. Reporting to the Operations Director and supported by other members of the Operations team, the Operations Manager provides day-to-day operational leadership of the activities within RHPCS. The Operations Manager plays an integral role within RHPCS, with responsibilities that include budget development, the management of human and physical resources, client relationships, and financial and administrative matters.

Manager, Applied Science, AWS Braket - Amazon, Seattle WA USA

Amazon Braket is looking for Applied Scientists in quantum computing to join an exceptional team of researchers and engineers. Quantum computing is rapidly emerging from the realms of science-fiction, and our customers can see the potential it has to address their challenges. One of our missions at AWS is to give customers access to the most innovative technology available and help them continuously reinvent their business. Quantum computing is a technology that holds promise to be transformational in many industries, and with Amazon Braket we are adding quantum computing resources to the toolkits of every researcher and developer.