Research Computing Teams #119, 23 Apr 2022

Hi!

I wanted to talk a little bit more about Research Computing and Data (RCD), how it connects to other work, and how that informs how I think about when RCD work is and isn’t a research output.

First, I want to make a claim that most readers here would likely agree with, but may not yet be universally accepted elsewhere: RCD is a discipline in and of itself. It is a body of knowledge and practice, with full time practitioners, conferences, knowledge creation, sharing, and — increasingly — degree programs.

In fact, I’d make the argument that RCD has been one of the most productive and influential academic disciplines of the last several decades. And an academic discipline it is - I don’t think anyone would doubt that the development of the FFT, or finite element methods, or new methods for processing next generation sequencing data or supporting digital humanities work, are real and significant research and intellectual accomplishments.

Research Computing and Data is a bit unusual in that it’s exclusively an applied discipline. There’s no real “pure” or “basic” RCD research work. Such work would fall into the fields of computer science (which is arguably a discipline that is itself a child of RCD) or applied mathematics or maybe electrical engineering (which are parents).

Instead, RCD work is always by its nature applied to some other area. And while being an applied-only field is a bit unusual, RCD isn’t completely alone in this. Engineering or even Statistics are overwhelmingly applied fields, but few would dismiss either of them as not being real fields of human endeavour, with real research outputs.

So let’s take it as given that RCD is a real discipline. I’d claim that the nature of disciplines is that they have an R&D practice. They have a spectrum of activities (all valuable!) which range from research on one end, to product development at the other. Crucially, there’s a point - maybe ill-defined, but it exists - at which the work stops being research itself and is now product development, just as is true for chemists, or materials scientists, or economists, or those in other fields. In those cases, the work stops being the creation of new knowledge in their fields, knowledge consumed principally by fellow experts in that field, and starts being about embodying that knowledge into something others can use. That embodying work is still work that requires expertise, it’s still work that builds on the history of work in the practitioner’s field, but it’s development of a product for use by others that needn’t be experts in that field.

Let’s say a materials scientist is doing some work that looks like it might lead to a new kind of sensor, that could be used in new kinds of lab equipment. Initial discovery work showing that it could work as a proof of concept, or even as a prototype, probably involves a lot of research work. The primary output is new knowledge about materials science, knowledge published in journals and that other materials scientists can build on.

Let’s say the team then contributes to work to commercialize that knowledge, to actually build the sensors that can be incorporated into new lab equipment. At that point the work stops being principally about producing new materials science knowledge and starts being about creating a new product that relies on, that contains, that embodies, that knowledge. There will be lots of careful, methodical, expert-level work done building packaging that doesn’t compromise the properties that make the material a good sensor, and building sensitive and reliable ways of measuring output from the packaged sensor. That’s excellent and important work, and will be used in lab equipment for research, but relies much more heavily now on existing best practices built up from previous product-building work. It need not and likely will not lead to the development of new materials science knowledge. It doesn’t generate materials science research outputs. That’s true even if it will be used to help support research in other fields.

This research-to-development journey doesn’t have to be strictly linear! It could be that in building the packaging new materials science knowledge is required. And to develop the next version of the sensor, it’s certainly possible that new materials science knowledge ends up being developed, generating materials science research outputs. But it’s also possible that it doesn’t, and that the work done is purely product work, developing a product rather than new materials science knowledge.

I think about research computing and data work, the work of research computing teams, the same way. Because RCD is always necessarily applied, I sometimes find it useful to think of it not as a one-dimensional research to development spectrum, but in a two-dimensional space - considering how mature the RCD work itself is, and how mature the problem it’s being applied to is.

In the case of creating a new sensor, the problem is evolving and becoming clearer along with the product itself - the path lies more or less along the diagonal. That’s also how I feel about much of bioinformatics, a field that has grown in parallel with (both being driven by and in turn driving) developments in high-throughput molecular biology data collection. Along that diagonal the work runs from a very exploratory phase, where you’re not even sure what questions make sense to ask, through turning things into prototypes, to delivering a mature product. Along that diagonal (which again needn’t be followed strictly linearly) work changes from producing mostly new RCD knowledge to packaging that knowledge into a product that non-RCD experts can use.

But there are alternatives to developing tools that grow in maturity with the field. In the top-right corner, with new RCD solutions but mature problems, new methods can be applied to extremely mature fields: a new numerical method for fluid dynamics, for instance. Novel methods, in any field, are more or less always recognized as research outputs. In these cases “product development” is much simpler - going from that proof of concept to something in production is likely something that can happen quite quickly, as the needs of users are very well understood, and there’s probably existing tooling you can build on to deliver the new method into the hands of users really quickly. On the other hand you could build new frameworks for existing methods; that’s useful, but is less likely to be a research output, building more on software development best practices than generating new RCD knowledge.

In the other corner, you have mature RCD outputs being applied to new problems. This is the work of translation; of realizing an existing method can be applied to answer new questions. That work can quite possibly generate both a new product and new RCD knowledge.

I won’t claim that this is The Answer to RCD as a research output, but it’s a robust, self-consistent and well-motivated take. I think we haven’t thought about RCD in terms of R&D mainly because up to this point, we’ve mainly been thinking of RCD solely in terms of research software development. Since we want research software by and large to be open source, a commercialization approach seems weird; and as long as grant funding rates were good, it was easier just to treat RCD products as research outputs, and get them funded and recognized by piggybacking on that approach.

But this is growing untenable, for a number of reasons:

- As research software products get more complex and more teams depend on them, the project-based approach that serves the funding of e.g. programmes of experiments becomes more and more unsuitable,

- Funding success rates are plummeting across the board anyway, and especially for any work that can be dismissed as “incremental”, such as maintenance of existing tools (this devaluing of incremental work causes problems well beyond its impacts on RCD, but that’s a whole different article),

- Research data curation and maintaining research data resources is increasingly important, and that work fits the research project mould more poorly even than research software development did,

- Research software, systems, and data are becoming more closely intertwined; it makes less sense than ever to consider them separately, and research systems are clearly mostly products.

I don’t know what the solution to any of the above is. But thinking more clearly about the purpose of our work and what success looks like, and RCD’s place as a discipline, is more likely to get us there than just continuing to hope that RCD work can be funded in the same way as doing new experiments or theoretical analyses.

Sorry, that was long! Now on to the roundup.

Managing Teams

Onboarding Can Make or Break a New Hire’s Experience - Sinazo Sibisi and Gys Kappers

I’m obviously thinking a lot about onboarding lately!

When we do hire, we often put a lot of work and effort into actually finding and hiring someone, and then the work kind of … stops. There’s not a lot of effort put into the “on ramp” to the job, getting people geared up and ready to succeed in their first three months or so. That makes the new hires unhappy - you’ve presumably made a point of hiring someone who is a high-achiever and wants to get stuff done - and it makes the other team members unhappy as it takes a long time for the new hire to come up to speed and pull their weight.

This situation is particularly dire in research computing, where we don’t get to hire very often and so any onboarding plan and materials we might have are probably way out of date. One of the reasons I really like hiring student interns year round is that it presents a huge opportunity (if taken, which I didn’t always do!) to get the hiring/onboarding/offboarding processes running really smoothly, and to improve them over time.

Sibisi and Kappers walk through their recommendations for improving onboarding. Like so much in managing, it’s not rocket science and the recommendations aren’t huge flashes of insight - it’s paying attention to the details and doing the work:

- Set goals for the onboarding process

- Collaborate across departments, units, (and I’d add stakeholders) the team member would be interacting with

- Make sure the new hire receives support consistently through the process

Mentoring Conversations: How to Be Remarkably Helpful with Limited Time - Karin Hurt, Let’s Grow Leaders

How to improve your listening skills? Here are five tips. - Laurie Brown

Honestly I’m pretty down on mentoring in this newsletter, not because it’s bad but because advice is often so cheaply given compared to (say) actively sponsoring a team member for new opportunities.

But.

If you’re deeply thinking about what your mentee wants and ask a lot of questions, particularly questioning their assumptions and using something of a more coaching approach to help them find their own answers, it can be really valuable. Hurt here describes a mentoring conversation that changed her career path.

Relatedly, Brown reminds us that listening well takes a lot of effort and practice; her five steps for listening (in mentoring or other conversations) are:

- Focus

- Pay attention to their body language, emotional tenor, and to what’s not being said

- Acknowledge what they said - paraphrasing back is a very powerful tool

- Inquire to get deeper

- And then respond.

Managing Your Own Career

Reverse Interviewing Your Future Manager and Team - Gergely Orosz

There are no perfect jobs, but there are bad jobs, and there are overall good jobs which nonetheless are a very bad match to what you personally prefer and want to be doing every day.

Once you get an offer you can start reverse-interviewing in earnest. I don’t mean the “what does success look like in this role” kind of question you ask in the last 5 minutes of a 60 minute interview slot, I mean really digging deep to figure out what working in the job would actually be like. Especially if you have a couple of offers (and today’s environment is very conducive to getting multiple offers), the information uncovered here can help guide you to a choice you’ll be happy with for a few years.

Besides any specific questions that you have after thinking about the role and the organization - anything which seems odd or surprising to you - Orosz and some of his colleagues contribute some pretty good general purpose questions for the hiring manager but also senior peers you’d be working with:

- What are you most excited about for the next six months?

- What did your typical workweek look like for the past month?

- How would you describe the team dynamics?

- What advice would you give the new hire to be successful?

- Has anyone left the team? What was the reason?

- Can you show a non-trivial code review [LJD - or migration or update or…]?

- What separates a good day from a bad day for you? (and then dig into how often the bad days occur and what causes them)

- What do you like and dislike about the manager?

- Who did they last promote on the team, and why?

- How supportive are they as a manager?

Even if the answers only solidify your decision to join the new company, they will be useful for giving you a head start, knowing better what to expect in your first months.

Product Management and Working with Research Communities

National Science Foundation Announces ACCESS Awards - Dina Meek, NCSA

ACCESS, the follow-on to XSEDE, is starting to take shape as NSF awards $52 million over five years for (as I understand it) personnel and models for service delivery.

In NSF’s call there were four separate tracks, for handling allocation services, for handling user services, for operations and integration between the different systems, and for monitoring and measurement services. (I have to admit, as an outsider, it’s not obvious to me why the last two would be separate). Then there’s an overall coordination office, which will be run out of NCSA.

With the awards just announced, details are sketchy - XSEDE will be active through August, so ACCESS will have several months to ramp up, and presumably we’ll learn more over the summer. But even with the press-release-level information, I’m fascinated by the most user-facing two of these: RAMPS (out of PSC) for allocation, and MATCH for user support.

With RAMPS, it looks like a number of useful ideas will come into play:

- “Establishing a hierarchy of opportunities so that smaller amounts of needed computational time will be accessible through simpler, quicker applications” - this will be better for researchers just getting started or that just don’t need lots of compute. It will be more work for the reviewers but better for researchers, which is a tradeoff we too often don’t make; and

- “Structuring the awards system so that, though users’ proposals will still be awarded based on their own merit, the NSF HPC resources will compete for users rather than the other way around.” - I REALLY want to see how this plays out. Far too often researchers basically have no choice in where they run, which means we get no “revealed preference” information about what they value. Giving researchers who don’t need highly-specialized resources “Access bucks” or whatever to spend where they want (if that’s what’s being described here, which I honestly don’t know) would be a huge shift in dynamics and would result in systems better tuned to what researchers actually value.

Do we have any readers who know more about the details of MATCH or RAMPS (or the other awardees) who are comfortable sharing some information?

As in-person conferences recommence, regional conferences on running scientific core facilities (like this one for the mid-Atlantic states in the US) are beginning to start back up. The managers and staff of these core facilities have a lot of expertise in sustainably providing service offerings to research, and lots of experience in service development, service lifecycles, and business case modelling. I’m not sure I’d recommend making a trip just to attend one, but if there’s one at or near your institution it could be a useful way to network with other leaders and managers facing similar challenges to RCD teams, in a different context.

Research Software Development

Storing 64-bit unsigned integers in SQLite databases, for fun and profit - C. Titus Brown

A blog post about research software development that touches on the challenges of working on a giant pull request, using SQLite in a research software code, and discussion of tradeoffs in different approaches is, as longtime friend of the newsletter Brown must know by now, too much for me to resist including here.

In this article, Brown describes moving sourmash away from the custom-built inverted index and sequence bloom tree into using SQLite as a searchable backing store connecting kmer hashes to the sequences containing the kmers. These get quite large (4.6 billion hashes), so efficient approaches are important. But efficiency means a few things here: ideally it would be low disk usage and fast and low memory usage, and of the three likely at least one will have to give. Hashes are an interesting problem for this, too, because since they’re hashes they don’t support (say) range searches; locality doesn’t mean anything in the hashed space.

Longtime - or heck even recent! - readers will know that I think embedded databases are criminally underused in research software, and Brown too really likes SQLite in particular, so he wanted to see if SQLite could be used here. That’s not trivial because SQLite, while great, has actually pretty weak support for types, and in particular doesn’t support 64-bit unsigned ints at all. The first approach didn’t go well! But by understanding the structure of the data - in particular since order didn’t matter with the hashes, he could type pun unsigned ints into signed ints as long as it was done consistently - he got very good results, consistent with the tradeoffs that made sense for the sourmash product (allowing more disk space use for the index if it makes lookups faster while keeping memory usage down). There’s also some solid PRAGMA choices described for read-only workloads like this one.

It’s a good article to read if you’re thinking of taking advantage of SQLite or other embedded databases for data lookups as opposed to “rolling your own”.
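The type-punning idea can be sketched in a few lines of Python with the standard library `sqlite3` module. This is only an illustration under stated assumptions, not sourmash’s actual code: the table name `hashes`, the columns `hashval` and `sketch_id`, and the helper functions are all hypothetical. The point is that an unsigned 64-bit value above 2^63 - 1 is mapped consistently onto a negative signed value before it is stored or queried, so equality lookups still work even though ordering is no longer meaningful.

```python
import sqlite3

U64_MAX = 2**64 - 1
I64_MAX = 2**63 - 1


def to_signed(u):
    """Reinterpret an unsigned 64-bit value as a signed 64-bit value."""
    if not 0 <= u <= U64_MAX:
        raise ValueError("not a 64-bit unsigned integer")
    return u - 2**64 if u > I64_MAX else u


def to_unsigned(s):
    """Inverse of to_signed: recover the original unsigned value."""
    return s + 2**64 if s < 0 else s


# Hypothetical schema: one row per (hash, sketch) pair, indexed by hash.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE hashes (hashval INTEGER NOT NULL, sketch_id INTEGER NOT NULL)"
)
conn.execute("CREATE INDEX hashes_idx ON hashes (hashval)")

# Made-up hash values, two of which do not fit in a signed 64-bit integer.
example_hashes = [5, 2**63, 2**64 - 1]
conn.executemany(
    "INSERT INTO hashes VALUES (?, ?)",
    [(to_signed(h), i) for i, h in enumerate(example_hashes)],
)


def lookup(h):
    """Find sketch ids for an unsigned hash, converting it the same way."""
    rows = conn.execute(
        "SELECT sketch_id FROM hashes WHERE hashval = ?", (to_signed(h),)
    )
    return [row[0] for row in rows]


# Reading values back requires the inverse conversion.
stored = [
    to_unsigned(row[0])
    for row in conn.execute("SELECT hashval FROM hashes ORDER BY sketch_id")
]
```

Here `lookup(2**64 - 1)` finds the row inserted for the largest hash, and `stored` matches `example_hashes`. The conversion has to be applied on every write and every query; a range query over `hashval` would give misleading results, which is acceptable only because locality means nothing for hashes.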

EULAs Aren’t Inherently Evil - Kyle E. Mitchell

Mitchell is a tech lawyer who the newsletter has linked to in discussions of open source development in the past (such as #83 on contributor license agreements). Here he makes the case that non-open-source software isn’t (pace, say, the FSF) inherently evil.

In research computing there’s an overwhelming case for needing source to be available for simulation and data analysis methods, but not all research computing software falls into those categories, and such availability needn’t only be through the traditional open source licenses.

Until we’ve figured out a way to make the development of RCD software products sustainable through traditional funding sources in the long term, we can’t completely rule out supplementary funding models for product development like hosted software-as-a-service, selling support, or just flat out charging for software. I’m watching infrastructure projects like Nextflow with interest, with a sort of “freemium” model of an open source community edition core and then Seqera Labs charging for hosting and support (and clinical labs actually prefer to pay for support for key tools). To make that work, we may have to consider a broader range of licensing models. I don’t love the idea either.

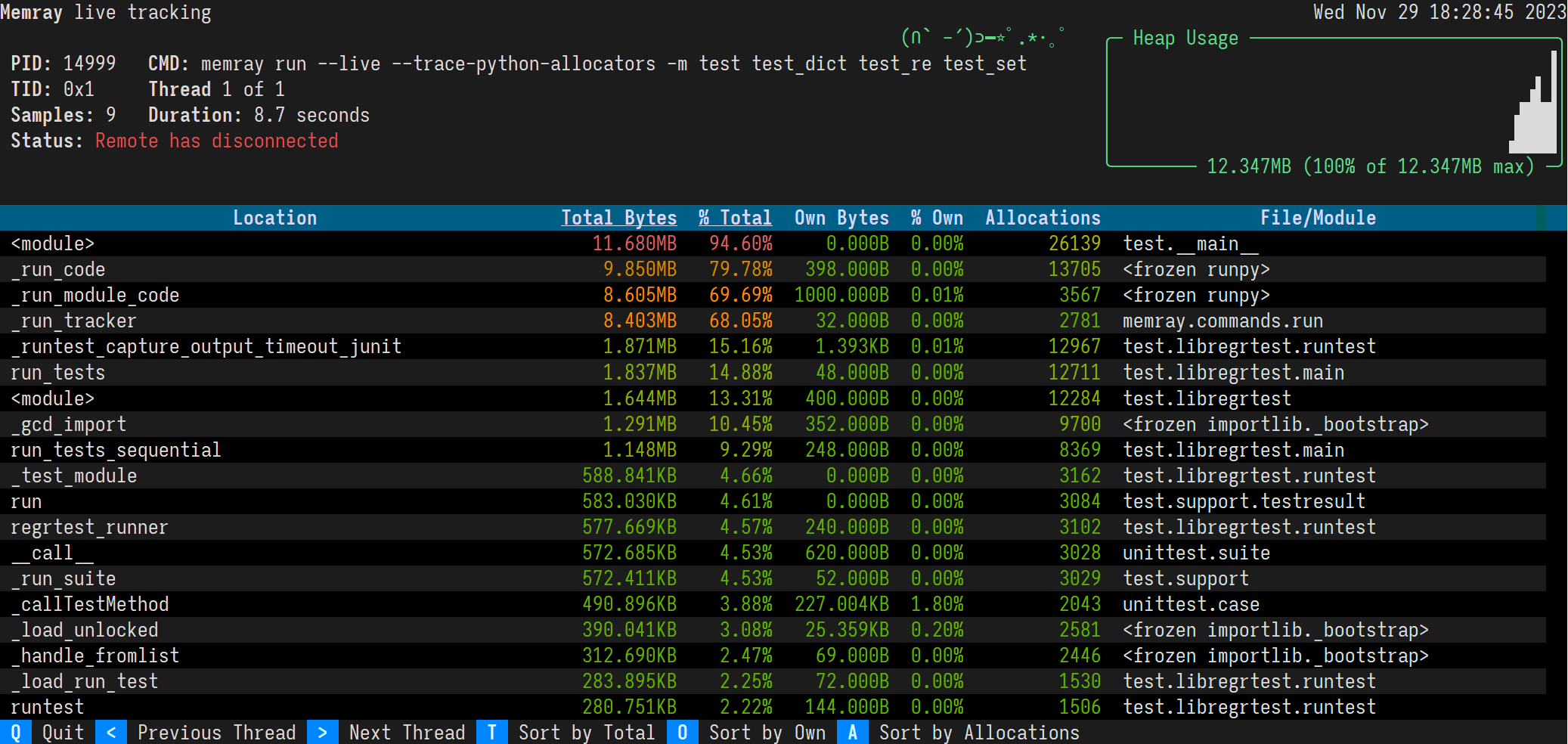

Bloomberg has open-sourced Memray, a really useful-looking Python memory profiling tool that runs standalone or can be queried via APIs.

Research Data Management and Analysis

Securely pushing to GitHub from a JupyterHub with gh-scoped-creds - Yuvi Panda

Potentially useful well beyond Jupyter, gh-scoped-creds is a github app which issues time-limited, repository-scoped credentials for your GitHub account that can be used to push changes in JupyterHub or on other shared systems where you might want to automate some github actions but don’t want your credentials lying around.

Push ML methods as far into the database as possible with the open-source MindsDB - I think this is an under-appreciated approach, and for some use cases will be a significant win over trying to do something clever and resource intensive with distributed systems.

An emerging light-weight stripped down alternative to elasticsearch - sonic. Cool looking, but as ever, mind how you spend those innovation tokens.

Research Computing Systems

CVE-2022-21449: Psychic Signatures in Java - Neil Madden, ForgeRock

More Java security issues, this time in the new (Java 15+) updated and improved(!!) code for handling ECDSA signatures. The rewrite meant that such signatures could be trivially bypassed by the sender, with any signature being “valid” if its r and s values are both set to zero. That isn’t great if it’s being used for e.g. JWT validation. The bug’s been there since the start of 2020, and known since at least Nov 2021, and fixes are just being rolled out now.

Luckily I doubt most of our systems are running such up-to-date versions of Java - e.g. Keycloak relies on Java 8 or 11.
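For context, the standard ECDSA verification procedure starts by rejecting any signature whose two integers, r and s, fall outside the range 1 to n-1, where n is the order of the curve group. A minimal sketch of just that range check follows, using the NIST P-256 group order as an example. It is not the Java code and not a full verifier; the function name `signature_in_range` is made up for illustration.

```python
# Order n of the NIST P-256 (secp256r1) curve group.
P256_ORDER = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551


def signature_in_range(r, s, n=P256_ORDER):
    """Return True only if both signature integers lie in [1, n - 1].

    A verifier should refuse the signature outright when this is False,
    before doing any elliptic-curve arithmetic.
    """
    return 1 <= r < n and 1 <= s < n


all_zero_ok = signature_in_range(0, 0)      # the degenerate all-zero signature
small_ok = signature_in_range(1, 1)         # in range, so it moves on to real checks
too_big_ok = signature_in_range(P256_ORDER, 1)  # r == n is out of range
```

Passing this check says nothing about whether a signature is genuine; it only rules out the degenerate inputs that should never reach the rest of the verification at all.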

New Ubuntu LTS just dropped. Some notable updates from my point of view are continuing mainstreaming of deep Arm support, OpenSSL moving to 3.0 which disables a lot of legacy algorithms (yay!), a move to nftables, and a major OpenLDAP update.

In the new job, I’ve been thinking about Slurm installations a lot more than before, when I took them as a given. So these are new-to-me resources that might not be new to you - a docker-compose Slurm “cluster” SchedMD uses for testing and training, and one University at Buffalo uses that also has e.g. Open OnDemand, Open XDMoD, and ColdFront.

Emerging Technologies and Practices

A Case For Intra-rack Resource Disaggregation in HPC - George Michelogiannakis et al, ACM Transactions on Architecture and Code Optimization

Disaggregation of systems has been coming up more and more often in the roundup (just last week in #118 as a motivation for Aquila, when talking about two testbed systems in Texas a couple weeks earlier in #116, and as a topic of more than one conference listed in January’s #105).

This paper by Michelogiannakis et al quantifies the potential usefulness on the HPC side, taking a look at real-world data from NERSC’s top-20 CPU and Knights Landing Cori system, augmented with profiling data from elsewhere on GPU-heavy ML jobs. (The authors go into quite a bit of detail in the paper - it’s worth reading even if you’re just interested in workload characterization of Cori.)

Anyone who’s been involved in helping researchers take advantage of large computing systems will be familiar with the basic motivating idea here. While you can often (sometimes with a lot of work!) get a simulation or data analysis code to take full advantage of a node’s cores, or its memory, or its memory bandwidth, or its network bandwidth, or its accelerators, or its local storage, pegging the needle on two or more of those resources at once is harder. Taking full advantage of all of them at once basically never happens, and if it did, it would mean the code would almost certainly have to be adjusted to be run effectively on another system that had a different mix of those resources. (This, by the way, is one reason why a single “utilization” number for a cluster with a mixed workload is a useless metric even if you’re inclined to believe that utilization of a scientific instrument is the sort of thing which should be a metric).

Since all of our systems are running a mix of jobs with a different profile for taking advantage of those resources, there’s no real “one-size-fits-all” best node size. What if you could “mix and match” within a rack (say), taking some unused cores or memory or GPU from one node and distributing them virtually to another node where they could be used?

In this paper, the authors look at how Cori is actually used, and find that if there were perfectly efficient ways of distributing the disaggregated resources at the rack level, they could cut back on memory or network bandwidth by 5 to 69% per rack and still satisfy worst-case average needs within a rack for their jobs. That would free up resources to be used elsewhere - maybe in more racks, maybe by hiring more staff - and also can be used to give rough and ready potential benefits to be weighed against performance and price costs of emerging disaggregation technologies.
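The intuition behind savings like these can be shown with a toy calculation. The sketch below is not the authors’ methodology and the numbers are invented for illustration. It compares sizing every node in a rack for the worst demand seen on any single node against sizing one shared rack-level pool for the worst simultaneous demand across the rack.

```python
# Toy memory demand (GB) per node at three points in time.
# These numbers are made up for illustration and are not from the paper.
demand = {
    "node_a": [10, 80, 20],
    "node_b": [70, 15, 30],
    "node_c": [20, 25, 90],
}

# Fixed nodes: every node is built identically, so each one is sized for
# the largest demand ever seen on any single node.
per_node_size = max(max(series) for series in demand.values())
fixed_total = per_node_size * len(demand)

# Rack-level pool: one shared pool sized for the largest total demand
# across all nodes at any single point in time.
pooled_total = max(sum(step) for step in zip(*demand.values()))

# Fraction of provisioned memory that pooling would save in this toy rack.
reduction = 1 - pooled_total / fixed_total
```

With these toy numbers the fixed design provisions 270 GB while the pool needs 140 GB, because the nodes do not peak at the same time. Real savings depend on how correlated the jobs’ demands are and on the overheads of the disaggregation technology itself, which this sketch ignores entirely.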

“Smarter” NICs for faster molecular dynamics: a case study - S. Karamati et al, arXiv:2204.05959

(I’ll note before starting that the paper describes work using NVIDIA SmartNICs/DPUs. The kinds of things the authors here are trying to do to take advantage of smarter programmable network cards apply more generally to this class of device, though, and the results here are sort of mixed and took a lot of work - it’s far from an advert for these cards! So I’m pretty comfortable including this article in the roundup.)

Here Karamati et al try various approaches to improve the performance of a simple application using SmartNICs. The application they work with is MiniMD, a simple sample application for molecular dynamics demonstrating patterns broadly similar to the much more complex and powerful LAMMPS code. MiniMD’s main purpose is to be a testbed for playing with various parallelization and programming approaches.

MiniMD is an interesting choice from among the Mantevo mini-codes because it isn’t principally communications bound (from the GitHub repo: “Any reasonable parallel computer should be able to achieve excellent scaled speedup (weak scaling)”). It also has a pretty serial per-node compute-and-then-communicate structure which makes overlapping communication and computation tricky. Finally, there aren’t a lot of collective operations (except for an all gather) in MiniMD. So it’s not obvious that putting more smarts in the network card is a route to improving performance of this code.

Sure enough, they don’t get any speedup from the code as it is. On the other hand, by restructuring the code to separate out ‘maintenance’ work (updating neighbour lists) from the main computation loops, thus increasing the task parallelism and giving the Arm cores in the NIC something useful to do in parallel as a coprocessor, they improve performance by up to 20% (while increasing power consumption only 6-13% because of the lower power consumption of the Arm cores now enlisted in the work).

The authors make the comparison to other kinds of accelerators - taking advantage of them may require code rearchitecture. I’d add that once that is done, other possibilities arise - for instance, I’d be curious to see how exploiting this newly-found task parallelism might work even on purely CPU systems.

This is pretty cool - Docker now has an experimental docker sbom which will attempt to produce a software bill of materials for a container image.

The singularity project reports on twitter that, thanks to work on unprivileged cgroups, Singularity CE 3.10 will let you do things like assign fractions of CPUs to containers. I know at least one group that’s playing with having jobs, or even login node sessions be encapsulated in a singularity image - this could be useful for that work, or even just as a template for using unprivileged cgroups in other contexts.

Random

The fascinating history of Lotus Improv, 1991’s most innovative take on spreadsheets, with an unquestionably better underlying take on data and which equally unquestionably failed completely. (Check out the incredibly late 80s/early 90s video!)

We’re going to see some extremely cool things done with this - The General Index, a downloadable n-gram index (n ranging from 1-5) of 107,233,728 (so far) academic journal articles.

Todo lists in the editor - must we? Who cares what those weirdos do with their “org mode”? Fine, ok, whatever. Here’s Vim Tada, a todo list for vim.

Learn about assembly on the ZX spectrum.

A very complete list of terminal tricks for Mac, aimed at those new to the terminal but including some Mac specific things I hadn’t heard of.

Parsing and validating dates in Awk. Look, I’m not going to judge, I’ve done unspeakable things with sed. Think of it as harm reduction, keeping the kids from getting into perl.

Pre-commit hooks for avoiding accidentally committing secrets to your git repos - ripsecrets.

A student license for OpenVMS on windows.

A lovely interactive visual tutorial on different approaches to rotations in 3D, and maybe the only quaternion explanation you’ll ever see featuring an adorable cartoon cow.

A promising-looking logging SSH gateway for bastion hosts - warpgate.

rqlite is a distributed relational database built atop SQLite. It stood up to Jepsen-style testing which is no small thing.

I’m not sure that implementing a message queue in the shell is a good idea, but it makes for a good tutorial introduction to the topic.

I wouldn’t say that compiling rust software for Windows95 is a bad idea, exactly, but I’m not sure why you’d want to.

On the other hand I’m pretty sure using dropbox as a backing store for a git repository is a bad idea, but I guess if you know the risks?

I’m absolutely certain that managing code repos from within your database is a terrible idea.

For the love of god do not implement your own linear algebra library in C.

That’s it…

And that’s it for another week. Let me know what you thought, or if you have anything you’d like to share about the newsletter or management. Just email me or reply to this newsletter if you get it in your inbox.

Have a great weekend, and good luck in the coming week with your research computing team,

Jonathan

About This Newsletter

Research computing - the intertwined streams of software development, systems, data management and analysis - is much more than technology. It’s teams, it’s communities, it’s product management - it’s people. It’s also one of the most important ways we can be supporting science, scholarship, and R&D today.

So research computing teams are too important to research to be managed poorly. But no one teaches us how to be effective managers and leaders in academia. We have an advantage, though - working in research collaborations has taught us the advanced management skills, but not the basics.

This newsletter focusses on providing new and experienced research computing and data managers the tools they need to be good managers without the stress, and to help their teams achieve great results and grow their careers.

Jobs Leading Research Computing Teams

This week’s new-listing highlights are below; the full listing of 155 jobs is, as ever, available on the job board.

Associate Managing Director, High Performance Computing Centre - Texas Tech University, Lubbock TX USA

Assists the Managing Director with the management, directing and daily operations of all High Performance Computer Center (HPCC) services and resources. Responsible for management and direction of supervised staff. Supervise daily resource operations for the HPCC. Assist, as needed, with Advising research groups on campus as to the effectiveness of HPCC resources as well as encouraging these groups to apply for national resources. Assist with budget preparation for HPCC.

Senior Technical Product Manager - Research Triangle Institute, Durham NC USA

The Data Integration, Reporting, and Analytics (DIRA) Group in the Research Computing Division at RTI International has an opening for a Senior Technical Program Manager to provide client-facing technical leadership and management oversight to projects focused on development of cloud-based informatics workflows, high-performance computing methods, data interoperability, and analysis of complex data sets. We are seeking a highly motivated and talented leader who combines deep technical skills (in areas of data, computing, or product development) with excellent translational communication skills. The ideal candidate will be as comfortable coordinating the efforts of highly specialized colleagues as they are diving into the technical work directly. A fundamental understanding of biology, programming or analysis experience, the ability to communicate technical ideas, and demonstrated client-facing leadership experience are essential.

Machine learning / High Performance Compute Release Manager - AMD, Markham ON CA

As release program manager in AMD’s machine learning software engineering team, you will drive end-to-end delivery of leading-edge technology in high performance GPU-accelerated compute and machine learning for the Radeon Open Compute software stack. You will learn about how the power of open-source software can be applied to solve real-world problems. You will interact with product management, customers, software and hardware engineering teams, quality assurance and operations in a new and growing team.

Director, Research Security - University of Toronto, Toronto ON CA

Reporting to the Associate Vice-President, International Partnerships, the Director, Research Security is responsible for identifying, developing, and implementing strategies to meet the security objectives of the University relating to research, innovation, international and commercialization activities within the Division of the Vice-President Research & Innovation (VPRI), the Office of the Vice-President International (OVPI) and the Office of the Chief Information Security Officer (CISO). The Director, Research Security is responsible for assessing and engaging with government officials on the identification and mitigation of research security threats. The Director, Research Security will serve as a key resource and advisor to senior leadership in both central and academic divisions, for best practices and policy development regarding research security, including data governance, partnership and geopolitical risks, cybersecurity, and other activities with direction from the Research Partnership Security Working Group.

Machine Learning Engineering Lead - Borealis AI, Toronto ON CA

As a Machine Learning Engineering Lead, you’ll be responsible for owning and delivering a project end to end – everything from data pre-processing to implementing machine learning training pipelines and deploying inference in a production environment. You’ll be directly managing a team of machine learning engineers, responsible for strategic planning, execution and delivery. You will provide your team with technical leadership and mentorship. You will work alongside other engineering leads across Borealis AI. As a people manager you will be responsible for growing your team and fostering career growth of the engineers.

Accelerator - Data Scientist Lead - Sanofi, Gentilly FR

You are a dynamic data scientist interested in challenging the status quo to ensure development and impact of Sanofi’s AI solutions for the patients of tomorrow. You are an influencer and leader who has deployed AI/ML solutions applying state-of-the-art algorithms with technically robust lifecycle management. You have a keen eye for improvement opportunities and a demonstrated ability to deliver AI/ML solutions while working across different technologies and in a cross-functional environment.

Head of Data Architecture - Sanofi, Bridgewater NJ USA or Toronto ON CA or Barcelona ES

As a senior member of the Digital Data team, the head of data architecture will play a hands-on leadership role to lead, develop and enhance the data architecture team. He or she will be responsible for the overall data architecture coherence and state-of-the-art data platforms. The Digital Data architecture team is in charge of setting the enterprise standards, reviewing and/or implementing solutions and services transversally in collaboration with the different data verticals (Data engineering, Data Dev Ops, visual engineering, Data Domain functions). This leader will ensure internal and external solutions are consistent with the overall strategies across Sanofi, relevant roadmaps, and internal standards where appropriate.

Principal Solutions Architect, Healthcare and Life Sciences - AWS, Remote UK

Amazon Web Services (AWS) is seeking a Life Sciences Research Computing focused Solutions Architect to work with our customers, including Biosciences and Genomics Computing customers, to craft cloud-based solutions.

As a trusted customer advocate, you will help organizations understand best practices around advanced cloud-based solutions, and how to migrate existing workloads to the cloud. You will have the opportunity to help shape and execute a strategy to build mind-share and broad use of AWS within enterprise customers. The ideal candidate must be self-motivated with a proven track record of customer obsession and delivering results. The ability to connect technology with measurable business value is critical to a solutions architect. You should also have a demonstrated ability to think strategically about business, products, and technical challenges.

Research Computing Lead - Rubius Therapeutics, Cambridge MA USA

Platform Owner for the Benchling Electronic Lab Notebook system, including developing and executing against a multi-year platform roadmap to maximize the usage and value of the system, educating users on system capabilities, owning integrations with related systems, and managing the support model including the system vendor and MSP resources. IT Business Partner to the Research and Translational Medicine teams, including developing and executing against a multi-year functional roadmap to address existing pain points and develop new capabilities; and facilitating collaboration between Research + Translational Medicine and the broader IT organization in areas such as support for instrument-attached PC’s and use of cloud-based Infrastructure- and Platform-as-a-Service (IaaS and PaaS) for management of large data sets and high-performance computing.

Software Engineering Manager - Lambda, Remote USA or CA

Lead, manage, and grow the software engineering teams responsible for creating software tools that will be used by the world’s top AI research teams to manage their deep learning compute infrastructure. Work with product, design, and marketing to build simple and beautiful customer experiences. Come up with new ideas for improving our products & customer experience. We want self-starters who want to make big contributions to Lambda’s technical strategy. Experience with high performance computing or managing large fleets of servers.

Scientific Software Engineer – Hydrological Forecasts - ECMWF, Reading UK

The successful candidate will take the lead in source-code management and release management of the CEMS-Flood system, and be responsible for technical testing, unit testing, continuous integration, and ensuring software quality is maintained. A particular responsibility will be to improve the design of the CEMS-Flood system using a shared software framework, working closely with the rest of the IFS Section and colleagues in the Production Services, Evaluation and Development Sections in the Forecast department. This will enable scientists, software developers and analysts to easily configure and run the system, and a smoother, better tested research to operations (and operations to research) transition of changes.

Software Development Engineer, Quantum Computing, Amazon Braket - AWS, Seattle WA USA

We are looking for Software Development Engineers to build upon one of AWS’s newest services. You will define, develop, and deploy solutions to make customer experimentation easier and more robust, and you’ll do this through inventing and evolving technologies foundational to quantum computing cloud infrastructure. You must enjoy working on complex computer science problems and thrive in a fast-paced and uncertain market. You are familiar with AWS tools and services, and best practices governing their use.

Senior Research Infrastructure Developer - University College London, London UK

The UCL Centre for Advanced Research Computing (ARC) is UCL’s new institute for infrastructure and innovation in digital research - the supercomputers, datasets, software and people that make computational science and digital scholarship possible. This Senior Research Infrastructure Developer role will have a particular responsibility for designing, building, and supporting infrastructure and services for storing and managing data and metadata. Existing services cover a range of functions, from multi-petabyte on-site storage systems supporting High-Performance Computing and longer-term data management, through cloud-hosted research output publishing systems, to systems to directly support researchers in their work such as the recently-launched Electronic Research Notebook.

Team Lead - Research Computing Services - University of Leicester, Leicester UK

As part of our broader investment in our Digital Strategy, we are looking to recruit a Team Lead for our Research Computing Services team, where you will be responsible for designing, maintaining and optimising our research technology infrastructure and services. This includes our High-Performance Computing services and research data storage, used to enable our computing intensive research and teaching. You will also have a critical role in developing and implementing new services that enable researchers to exploit public cloud services (Microsoft Azure), delivering the technology components of a Trusted Research Environment.

Director AI/ML Engineer for Computer Vision - GSK, Brentford UK

We’re looking for a Director AI/ML Engineer for Computer Vision to help us make this vision a reality. Competitive candidates will have a track record in developing SOTA deep learning models for solving challenging real world computer vision problems. They should be an outstanding scientist with in-depth knowledge in modern machine learning, as well as classical computer vision techniques and software libraries.

Senior Engineer, Computational Policy Lab - Stanford University, Stanford CA USA

The position involves working with a team of data scientists and engineers to examine pressing issues in public policy. Initial projects include designing algorithmic tools to help judges make pretrial bail decisions, creating a system for prosecutors to decide which cases to charge, and building a dashboard to monitor key outcomes in municipal jails. Other projects include investigating potential bias in police interactions with the public, and examining the application and effects of sentencing enhancements. Future projects may span diverse policy areas including education, health, and voting rights.

Technical Director - AI for Mission - Carnegie Mellon University SEI, Philadelphia PA or Arlington VA or remote USA

As Technical Director - Head of AI for Mission on our team, you will lead, shape, and direct our mission-focused, applied AI prototyping and development portfolio in support of critical U.S. government needs. As an executive-level member of our team, you will also contribute to the overall vision, strategy, and direction of the division and the SEI.