Research Computing Teams #105, 16 Jan 2022

Hi everyone - Happy New Year!

Here at RCT HQ, we’re slowly getting back into the swing of things after a long and relaxing holiday break - I hope it was relaxing and refreshing for you, your close ones, and your teams as well.

Since last we spoke, the big RCT-relevant discussions online have been about the aftermath of the Log4j fiasco, which was so big and had such large impact that in the US, the Federal Trade Commission as well as the national security apparatus have gotten involved.

Leaving the security aspects aside, the fundamental issue of scandalously under-resourcing the maintenance of underpinning open-source software is one which has been brought to the forefront, with even policy makers now well aware of the problem. Useful software will get used, often in unpredictable ways - that link takes you to a Google security blog post describing how 8% of Maven Central packages use log4j, but only 1/5 of that is of direct usage, the rest being indirect use by a dependency of the package, or a dependency-of-a-dependency-of-a-dependency. But there’s no robust mechanism for making sure the foundational work that underpins the flourishing ecosystem is supported. That, coupled with the high-profile bricking of faker.js and colors.js, have made the questions of what the broader community owes to foundational software maintenance, and what (if anything!) foundational software maintainers owe to the community, a central topic.

Of course this has long been an issue and discussed, in Research Computing and Data communities and in the Open Source community in general - The New Stack had an article explaining, correctly, that Log4j is one big `I Told You So’ for open source communities - but this very high-profile issue with huge consequences, along with a number of articles on the burden of maintaining open source packages, has brought it to the forefront in a way that even decision makers who wouldn’t normally wrestle with these issues are now confronted with.

So for the coming few months, connecting the needs of supporting research computing with Log4j will be an effective way of raising the issue with certain decision makers who might otherwise not be sympathetic - or at least, may not understand first-hand the urgency of the problem. Research funders want to fund innovative and cutting-edge work, and this will be an effective shortcut for reminders about the necessary preconditions for the glamorous results.

The preconditions of course include support of the people that work on software, data management, systems for research computing and data, and one of the ways to do that is to develop and inventory career pathways for these experts. Our colleagues at the Campus Research Computing Consortium (CaRCC) Career Arcs Working Group have a 15-minute survey that they’d like as many research computing and data team members as possible to fill out describing their career arc to date and what they’re looking for in next steps; filling that out will help inform the development of career pathways at member (and other) organizations. It would help if you could fill it out; in this newsletter we focus on supporting those of us in or considering moving into leadership and management roles in Research Computing and Data (RCD), and it would be important to have that perspective reflected in the surveys, but all pathways of interest really need to be highlighted.

With that, on to the round up! I’ve highlighted a few of the most interesting articles of the past weeks below with pretty short summaries.

Managing Teams

Why We Need to Think of the Office as a Tool, with Very Specific Uses - Tsedal Neeley and Adi Ignatius, HBR

As we get ready to enter the third calendar year of the COVID-19 pandemic, it’s pretty clear that research computing teams’ relationship to the office and campus has changed in a way that will stay changed for the rest of our careers.

That doesn’t mean that a shared office has no place in our plans any more - and certainly campus matters! - but I think it’s pointed out to all of us that we were both leaning too heavily on being in person to avoid certain communication problems, while somehow also failing to take full advantage of the opportunities that being co-located with each other and with the broader research community provided.

This article is an interesting nuanced discussion between Neeley and Ignatius, but I think the most insightful and productive idea is right there in the title - the office is a tool, like slack or google docs or work-issued laptops, and it’s for us in our own local contexts to decide the best things to make with that tool, for our teams and for the communities we serve.

Handling Talent Fear - Aviv Ben-Yosef

With the tech hiring market red-hot, I know one manager who has exactly the talent fear Ben-Yosef describes here; they feel unwilling to be too directive or give too much feedback or coaching to their team members for fear that they leave. Yes, people can leave, and while they are on the team, it’s your duty to them and to the team as a whole to give them the feedback they need to get better and for the team to work together effectively.

If you find that challenging even in other times, in #83 we had a good article, A Manager’s Guide to Holding Your Team Accountable by Dave Bailey, about building accountability within a team, and we’ve covered a number of articles on giving clear feedback and coaching. There’s a good recent article at First Round Review by and Anita Hossain Choudhry and Mindy Zhang, The Best Managers Don’t Fix, They Coach — Four Tools to Add to Your Toolkit on coaching for addressing performance growth.

Tech 1-on-1 ideas & scripts - Rob Whelan

Here are some scripts or ideas for discussions for one-on-one meetings with team members, broken up into four categories of situations:

- Nothing from me - skip this one?

- Everything is (really) fine

- This is fine (on fire)

- Something has to change

They’re short reads but useful for clarifying your thinking before going into one-on-ones; certainly for new managers and it’s always worth refreshing on the basics.

Managers should ask for feedback - CJ Cenizal

Cenizal makes what should be an uncontroversial - but relatively uncommonly followed - point that managers should be routinely asking for input on their own behaviours and leadership from their team members. This is much more easily done if there are routine one-on-ones, if the ask for input is also routine (not necessarily every one-on-one, but frequent), and the manager has a habit of demonstrating that they take such input seriously and are comfortable talking about their weaknesses and missteps. All of this is prerequisite for getting better at being a manager, team lead, project manager, or any other kind of leader! In the article, Cenizal lays out the steps:

- Establish the fundamentals (one-on-ones, and giving feedback)

- Solicit feedback, supported by demonstrating transparency, vulnerability, and asking good questions (e.g. “what should I be doing differently”, not just “is there anything I should be doing differently” or, worse, “how am I doing”

- Accept what you hear

- Think and plan

I’d add a final step, following up with the team member. It’s important to show that you’ve taken the input and what underlies it seriously, even if you don’t adopt their proposed solution wholesale.

Even the UK Parliament and The Register are hearing that research computing and data staff are absurdly underpaid.

Managing Your Own Career

Copy the Questions, not the Answers - Jessica Joy Kerr

When getting advice from others - about your job, or your career - people tend to jump into giving you the answers (“When I was in this situation, I did X; you should do X”) but those answers rarely translate to your situation. Kerr reminds us that you don’t want to copy others answers, but it may be very useful to copy the questions they asked themselves and their decision making process.

Navigating Your Career Towards Your Own Definition of Success - Miri Yehezkel

Your Action Plan to DRI Your Career - Cate Huston

There’s only one person in charge of your career, and that’s you - you’re the Directly Responsible Individual (DRI) in the lingo of Apple’s internal decision-making process. Huston spells out concrete steps to take in planning your next move; Yehezkel’s article talks more about figuring out what you want, and how to prepare yourself at your current position before taking those next steps.

Working with Research Communities

A collaborative GIS programming course using GitHub Classroom - Berk Anbaroğlu, Transactions in GIS

Discord For Online Instruction [twitter Thread] - Sara Madoka Currie

Two different tools for teaching are outlined here:

In the first, Anbaroğlu describes using GitHub Classroom to support a course teaching GIS programming, where students are paired up randomly to write a QGIS plugin. The paper describes the structure and pedagogy of the course, using GitHub to coordinate interactions, the steps in creating an assignment, survey responses, and student satisfaction. Incidentally the paper includes a very nice table with links to eleven different comparable GIS programming courses, if you’re interested in seeing what other courses are covering.

In the second, Currie advocates for using Discord for online teaching - the argument being that its asynchronous-first approach makes it more flexible and accessible, while still allowing synchronous (or asynchronous) video calls, screenshares, etc.

Research Software Development

Strong Showing for Julia Across HPC Platforms - Nicole Hemsoth

Comparing Julia to Performance Portable Parallel Programming Models for HPC - Wei-Chen Lin and Simon McIntosh-Smith

Automated Code Optimization with E-Graphs - Alessandro Cheli, Christopher Rackauckas

I got turned off of Julia in its first few years by a series of erratic product management decisions, but the language and ecosystem seems to have stabilized and continues to evolve.

In the paper by Lin and Macintosh-Smith, described by Hemsoth, Julia acquits itself honourably against OpenMP, Kokkos, OpenCL, CUDA, oneAPI, and SYCL in the case of a memory-bound and compute bound mini application, across a number of different systems (Intel, AMD, Marvell, Apple, and Fujitsu CPUs, and NVIDIA & AMD GPUs). It did pretty well for the memory-bound case (although it was 40% slower on the AMD GPUs); it did a bit less well on the compute-bound kernel, as it couldn’t support AVX512 on x86, and on the Fujitsu A64X it couldn’t reach high single-precision floating point performance. Still, it’s solid results against carefully written specialized code.

And there are cool things ahead - Julia’s Lisp-like code-as-data makes it very easy to write domain-specific languages in, or use for symbolic programming, or do custom run-time rewriting of code. It’s that that Cheli and Rackauckas write about in their paper (and is described accessibly in this twitter thread). The same rewriting tools that other application areas are applying to symbolic computation in Julia can increasingly be used on Julia code in JIT compilation, allowing for very cool optimizations - such as being able to flag that reduced accuracy is ok in a specific region of code, or specifying known invariants or constraints in a piece of code that a static analysis wouldn’t be able to identify.

An Honest Comparison of VS Code vs JetBrains - 5 Points - Jeremy Liu

This topic actually came up this week at work - the inevitable “just got a new computer, what should I install” question. This is a quick overview of VSCode vs JetBrains, and Liu’s points are consistent with my experience of the two ecosystems - basically, VSCode is an editor with IDE features and an enormous third party package ecosystem of integrations, and JetBrains products are full fledged (and so slower to start up) IDEs for particular programming languages. If you need sophisticated refactoring capabilities and super easy debugging in the set of programming languages JetBrains supports, it’s going to be hard to beat that with VSCode. On the other hand if you need a fast editor with a wide range of capabilities and tools for supporting just about any programming language anyone uses, JetBrains tools are going to seem a little clunky and limited.

Hermit manages isolated, self-bootstrapping sets of tools in software projects - The Hermit Project

This is somewhat interesting - Hermit is a Linux/Mac package manager of sorts (think homebrew, but with conda-like environments) but targeted specifically at providing isolated, self-bootstrapping, reproducible dependencies for a software project, for development, packaging, or CI/CD. For research software, which often “lies fallow” for a time between bursts of development effort, this could be of particular use. Integration into the SDKs of Python, Rust, Go, Node.js and Java amongst others are supported.

A reminder that Python3.6 is now officially past end-of-life.

Research Data Management and Analysis

Don’t Make Data Scientists Do Scrum - Sophia Yang, Towards Data Science

On the one hand, research computing and data projects, especially the intermediate parts between “will this even work” and “put this into production”, often map pretty well to agile approaches - you can’t waterfall your way to research and discovery.

On the other hand, both the most uncertain (“Will this approach even work?”) and the most certain (“Let’s install this new cluster”) components are awkward fits to most agile frameworks, even if in partially different ways. The most uncertain parts are basically 100% research spikes, which short-circuit the usual agile approach; the most certain parts you don’t want a lot of pivoting around. And both ends of the spectrum benefit from some up-front planning.

Here Yang, who’s both a data scientist and a certified scrum master, argues against using scrum to organize data scientists, whose work is generally firmly on the “uncertain” side of the spectrum.

The argument is:

- You need flexibility

- Data scientists do not work on a single “product”, and work is often not an increment

- Data scientists do not always need a product owner, and..

- taking the ownership away from the data scientist is often not helpful

- The definition of “Done” varies across projects

- Sprint review doesn’t work

This doesn’t mean agile approaches aren’t useful, but they need some grounding in the nature of these more research-y efforts. This is true of some research software development efforts, too. Models like the Team Data Science Process or CRISP-DM or the like are worth investigating - not necessarily for verbatim adoption for a research process, but for getting a bit more nuance and structure.

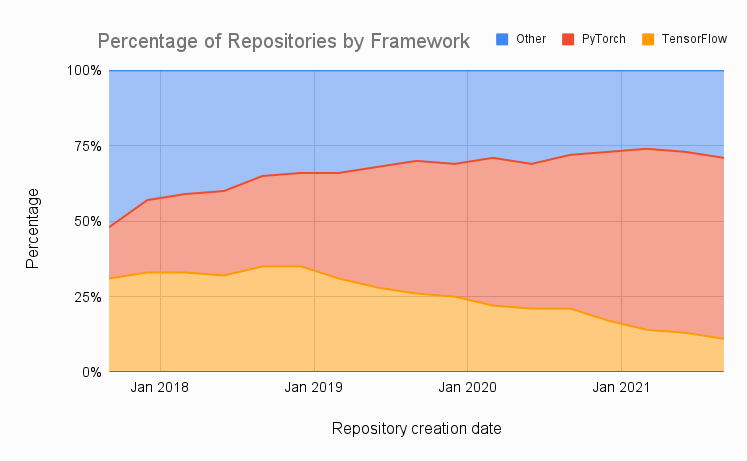

PyTorch vs TensorFlow in 2022 - Ryan O’Connor, AssemblyAI

Well, if we’re brave enough to wade into editor/IDE debates in Research Software, why not cover PyTorch vs TensorFlow as well?

O’Connor examines PyTorch vs Tensor Flow in three dimensions

- Model (and paper) availability - PyTorch pretty decisively. There’s just way more models available for PyTorch, and papers with code submissions favour PyTorch 60:11.

- Deployment - TensorFlow has very robust deployment options in Serving and TFLite; PyTorch’s PyTorchLive and TorchServe are either limited or just beginning.

- Ecosystems - TensorFlow has a stronger all-around ecosystem, but not overwhelmingly so

O’Connor’s recommendation is that, if you aren’t already productively using one or the other, PyTorch is probably stronger for ML research unless you’re specifically using reinforcement learning, and that there are other frameworks of interest like JAX. For teaching, if models are the focus, PyTorch makes sense, while if software development around ML is the focus, TensorFlow makes the most sense. In Industry, he recommends TensorFlow unless you’re focussing on mobile applications where PyTorch Live is quite strong.

What would it take to recreate dplyr in python? - Michael Chow

Heck, let’s do R vs Python, too!

I don’t love R as a programming language, but it’s inarguably an amazing and productive environment for interactive data analysis. Compared to Python’s pandas-based ecosystem, it has fairly dumb data frames and puts the smarts in the processing tools, like the dplyr library, which lets you pipeline data operations in a functional-programming like way on the data frames. This approach is great interactively, easier to understand (at least for me), and can lead to real performance wins on large data sets with complex pipelines, at the cost of some other kinds of operations like data lookups.

This is a great article covering the differences, some of the costs of pandas’ approach (index copying, type conversions, non-composable grouped operations), and what it would take to allow dplyr-type pipelined operations in Pandas. He does this in the context of an attempted implementation of some of that functionality in the siuba package.

dsq: Commandline tool for running SQL queries against JSON, CSV, Excel, Parquet, and more - Phil Eaton, DataStation

Bashing JSON into Shape with SQLite - Xi Iaso

Ever wish you could run SQL on a JSON file, or JOIN a JSON file to a CSV?

There are an increasing number of these “sql on non-database-data-files” tools around now, and it’s great - it allows rapid and uniform(ish) analysis across datasets with modest amounts of data munging, and the SQL interface allows a nice on-ramp to eventually getting the data into a database.

I haven’t used dsq yet, but DataStation just open sourced it; it’s based on SQLite and the range of data file types supported (including currently nginx and apache log files) seems really handy.

Relatedly, since dsq makes use of SQLite, Iaso describes the use of SQLite’s JSON capabilities, JSON functions, and SQLite triggers to automate the process of munging JSON.

Research Computing Systems

New Tool: Mess with DNS! - Julia Evans

Mess with DNS - Julia Evans and Marie Claire LeBlanc Flanagan

Here Evans outlines a terrific tool she put together for teaching and experimenting with DNS. Uniquely, as far as I know, it gives you a real subdomain on a real DNS server, shows a live stream of incoming DNS queries, lets you experiment in any way you like with (with two static IP addresses provided to use), and also has a set of increasingly sophisticated experiments you can walk through. The first link is the blog post where she describes both the tool and the process of building it, including tool choices; the second is the tool itself.

Publication of the Trusted CI Guide to Securing Scientific Software - Trusted CI

The Trusted CyberInfrastructure project has released its report and now guide into securing scientific software - and to some extent the systems they run on.

The guide covers the usual topics, but with specific focus on scientific computing: “social engineering”, classic software exploits such as you’d see on OWASP’s top 10 (injection attacks, buffer overflows, improper use of permissions, brute force, software supply chain) and network attacks (replays, passwords, sniffing), and gives guides on training, governance, analysis tools, vulnerability management, and using good cryptography.

For those interested in learning more, For those interested in hearing more, three’s a webinar focused on the topic of software assurance scheduled for February 28, 2022 at 10am Pacific / 1pm Eastern; free registration can be found here.

It’s Time to Talk About HPC Storage: Perspectives on the Past and Future - Bradley Settlemyer, George Amvrosiadis, Philip Carns, Robert Ross, Computing in Science & Engineering

In this open-access paper, the authors talk about the challenges of adapting HPC storage architectures to increasingly data-driven workloads, especially AI.

Developments in low-latency RDMA networks and NVMe storage help, but they argue that the storage architectures of the early 2000s still need updating, particularly the model of having a small number of big storage servers. And providing secure access while driving down latencies is genuinely challenging.

They argue for new approaches focusing on the entire data path - zoned data storage rather than block models, more distributed data, better use abstractions, and a revitalized HPC storage research community.

Kyoto University loses 77TB of supercomputer data after buggy update to HPE backup program - Dan Swinhoe, Data Center Dynamics

I think we all read about and winced at this one - an HPE software update deleted 77TB of data from backups, as correct “delete log files after 10 days” behaviour interacted with incorrect “update a running bash script which changed how variables were handled, resulting in undefined variables” behaviour. Worth considering as a tabletop exercise - if something like this happened, how long would it take for your systems team to discover it? What would be the next steps?

Emerging Technologies and Practices

Run a Google Kubernetes Engine Cluster for Under $25/Month - Eric Murphy, The New Stack

If you want to play with a “real” Kubernetes deploy (e.g. not just on your workstation) without tying up local resources, Murphy describes running a single GKE cluster on Google cloud with 3 nodes, 6 cores, 24 GB ram, enough for a modest web application or CI/CD pipeline or the like, for under $1 USD/day by relying on spot instances and cheap regions but not requiring expiring free tiers (except for the GKE control plane, which is free for one cluster but not if you use more than one in the same account). Murphy provides a Terraform config in a GitHub repo.

AI makes it possible to simulate 25 billion water molecules at once - Matthew Sparkes, New Scientist

Extending the limit of molecular dynamics with ab initio accuracy to 10 billion atoms - Zhuoqiang Guo et al, ArXiv

Using AI-trained kernels for traditional HPC simulations continues to show a lot of progress; here are two related works demonstrating the use of deep-learning trained systems to model larger systems faster while still being recognizable as MD simulations.

Events: Conferences, Training

If you have, or are thinking of starting, a practice of having team members who attend trainings come back and share what they’ve learned, the article Different ways to share learnings from a training event by Richard McLean may be of interest. There’s a lot to be said about having such a practice - it’s a good way to kick-start other knowledge-sharing practices within or across teams, and also just a good way to get people to practice giving educational presentations or training in front of a friendly audience.

The International Conference on High Performance Computing in Asia-Pacific Region (HPC Asia 2022) - 12-14 Jan, online, Free

This three day free online conference includes technical talks on very large scale simulations, algorithms for simulations, system software for machine learning, performance evaluations, and workshops on resource disaggregation, ARM in HPC, and multi-physics problems.

1st International Workshop on Resource Disaggregation, in High Performance Computing (Asia Edition) - 14 Jan, Online

Held in conjunction with HPC Asia‘22 above, this first workshop on disaggregated resources in HPC covers current (CXL) and emerging (photonics) technologies for disaggregated/composable computing.

Fundamentals of Accelerated Computing with CUDA C++ - 14-15 Feb, 13:00-17:30 EST, Virtual

The Molecular Sciences Software Institute (MolSSI) and NVIDIA have a series of limited-registration hands-on workshops - the next workshop with attendance still open is CUDA C++, and a following one in March covers scaling CUDA C++ applications to multiple nodes.

Fortran for Scientific Computing - 21-25 Feb, Online, EU-friendly timezones, €30-360, Registration due 23 Jan

Topics covered include

- Fortran syntax overview

- Basics - Program units - Dynamic data - IO

- Obsolete features of Fortran

- Fortran intrinsics

- Multiple source files

- Optimisation of single processor performance

- Features of Fortran 2003 and 2008

- Coarray Fortran

- Compilers for Fortran and their usage

Argonne Training Program on Extreme Scale Computing - 31 Jul-12 Aug, Chicago area, Applications for registration due 1 Mar

Argonne’s extreme-scale computing training registration is open; due to limited enrolment, attendance is competitive; all costs are covered for successful applicants.

Calls for Submissions

A lot of CFPs have come out over the holidays:

12th International Workshop on Accellerators and Hybrid Emerging Systems (AsHES 2022) - Colocated with IPDPS2022, 30 May - 2 June, Lyon, Papers due 30 Jan

Full (8-10pp) or short (4pp) papers are solicited for applications/use cases, heterogeneous computing at the edge, programming models, optimization techniques, compilers and runtimes, system software, and more.

4th Workshop on Benchmarking in the Data Center: Expanding to the Cloud - 2-3 April, Seoul, Papers due 31 Jan

Held as part of Principles and Practices of Parallel Programming 2022, 8-page papers on benchmarking systems are welcome, especially in areas of:

- Multi-, many-core CPUs, GPUs, hybrid system evaluation

- Performance, power, efficiency, and cost analysis

- HPC, data center, and cloud workloads and benchmarks

- System, workload, and workflow configuration and optimization

27th International Workshop on High-Level Parallel Programming Models and Supportive Environments (HIPS’22), 30 May, Lyon, papers due 28 Jan

Held as part of IPDPS 2022, this topic covers high-level programming for HPC-type applications, including high-level and domain-specific programming languages, compilers, runtimes, monitoring and modelling tools, etc.

DOE Monterey Data Workshop 2022 - Convergence of HPC & AI - 20-21 Apr, Berkeley and Online, session proposals due 31 Jan

From the call:

The meeting organizers are seeking speakers to give short (nominally 20 minutes) talks on progress, ideas, and/or challenges on AI/ML. We encourage talks from early career scientists and are prioritizing talks in the following topical areas: Scalable and productive computing systems for AI, Interpretable, robust, science-informed AI methods, Novel scientific AI applications at large scale, AI for self-driving scientific facilities

ExSAIS 2022: Workshop on Extreme Scaling of AI for Science, 3 June, Lyon, Papers due 1 Feb

Also part of IPDPS, this workshop focusses on extreme-scale AI for science. Algorithms, tools, methods, and application case studies are of interest.

36th ACM International Conference on Supercomputing (ICS) - 27-30 June, Virtual, Papers due 4 Feb

From the call,

“Papers are solicited on all aspects of the architecture, software, and applications of high-performance computing systems of all scales (from chips to supercomputing systems), including but not limited to:

- Processor, memory, storage, accelerator and interconnect architectures , including architectures based on future and emerging hardware (e.g. quantum, photonic, neuromorphic).

- Programming languages, paradigms and execution models, including domain-specific languages and scientific problem-solving software environments.

- Compilers, runtime systems and system software, including optimization and support for hardware resource management, energy management, fault tolerance, correctness, reproducibility, and security.

- High-performance algorithms and applications including machine learning and large-scale data analytics, as well as the implementation and deployment of algorithms and applications on large-scale systems.

- Tools for measurement, modeling, analysis and visualization of performance, energy, or other quantitative properties of high performance computing systems, including data and code visualization tools.”

34th ACM Symposium on Parallelism in Algorithms and Architectures (SPAA 2022) - 12-14 July, Philadelphia, papers due 11 Feb

“Submissions are sought in all areas of parallel algorithms and architectures, broadly construed, including both theoretical and experimental perspectives.” A subset of topics of interest include the below, but see the CFP for the full list.

- Parallel and Distributed Algorithms

- Parallel, Concurrent, and Distributed Data Structures

- Parallel and Distributed Architectures

- System Software for Parallel and Concurrent Programming

SciPy2022 - 11-17 July, Austin TX, Talk and Poster presentation abstract proposals due 11 Feb

Besides the general conference track, there are tracks in ML & Data Science, and the Data Life Cycle. There are also minisymposia in Computational Social Science & Digital Humanities, Earth/Ocean/Geo & Atmospheric sciences, Engineering, Materials & Chemistry, Physics & Astronomy, SciPy Tools, and for Maintainers (very timely).

RECOMB-seq 2022 - 22-25 May, La Jolla CA, Papers due 11 Feb

One of the premiere venues for algorithmic work in ‘omic sequencing has its CFP out - submissions (new papers, highlights, or short talks or posters) are due 11 Feb.

CCGrid-Life 2022 - Workshop on Clusters, Clouds and Grid Computing for Life Sciences - 16-19 May, Italy, Papers due 11 Feb

“This workshop aims at bringing together developers of bioinformatics and medical applications and researchers in the field of distributed IT systems.” Some relevant topics of interest are:

- Detailed application use-cases highlighting achievements and roadblocks

- Exploitation of distributed IT resources for Life Sciences, HealthCare and research applications

- Service and/or algorithm design and implementation applicable to medical and bioinformatic applications. E.g. genomics as a service, medical image as a service.

- Genomics and Molecular Structure evolution and dynamics.

- Big Medical and Bioinformatic Data applications and solutions.

Supercomputing 2022 Workshop Proposals - 13-18 Nov, Workshop Proposals due 25 Feb

With SC21 barely over (and its in-person program still somewhat controversial), it’s already time to start thinking about SC22 half-day or full-day workshop proposals.

10th International Workshop on OpenCL and SYCL - 10-12 May, Virtual, Submissions due 25 Feb

Submissions for papers, technical talks, posters, tutorials. and workshops are due 25 Feb

14th International Conference on Parallel Programming and Applied Mathematics, 11-14 Sept, Gdansk and hybrid, Papers due 6 May

Papers are welcome covering a very wide range of topics involving parallel programming for applied mathematics.

Random

You will shortly be able to use Markdown to generate and display architecture or sequence diagrams on GitHub in READMEs, issues, gists, etc using mermaid syntax.

Writing good cryptographic hash functions is hard! Here’s a tutorial inverting a hash function with good mixing but no fan out.

Mmap is not magic, and has downsides. Here’s a paper explaining its limitations particularly for databases.

Create neural network architecture diagrams in LaTeX with PlotNeuralNet.

It’s gnu parallel’s 20th birthday this month! Take the opportunity to say happy birthday to this extremely useful and very frustrating tool.

“Bash scripts don’t have to be unreadable morasses where we reject everything we know about writing maintainable code” has been a long-running theme of the random section of this newsletter - here’s a community bash style guide.

As has advocating for the under-appreciated utility of embedded databases. Consider SQLite, a curated sqlite extension set, and a set of sqlite utils.

And SAT solvers/constraint programing/SMT solvers. Here’s solving wordle puzzles using Z3, or the physical snake-cube puzzle with MiniZinc.

Use Linux but miss your old pizza-box NeXTStation? Use NEXTSPACE as your desktop environment.

Miss 80s-style BBSes and miss writing code in Forth? Have I got news for you. Maybe you could use this Forth interpreter written in Bash. Or this one which compiles(!) to bash.

A real time web view of your docker container logs - dozzle.

A visual and circuit-level simulation of the storied 6502 processor (Apple II, Atari 2600, Commodore 64, BBC Micro) in Javascript.

High-speed and stripped-down linker mold has reached 1.0.

If you write C++, you might not know that lambdas have evolved quite a bit since C++11, with default and template parameters, generalized capture, constexpr safeness, templated lambdas, variadic captures, and you can return lambdas from functions now.

Function pointers satisfying an abstract interface in Fortran, with fractal generation as an example.

A really promising-looking free course and online book on Biological Modelling by Philip Compeau, who was part of the unambiguously excellent bioinformatics algorithms coding tutorial project Rosalind and “Bioinformatics Algorithms: An Active-Learning Approach” book.

A quantum computing emulator written in SQL with a nice tutorial explanation of state vector representations and some basic gates.

A lisp interpreter implemented in Conway’s game of life.

Rwasa is a web server written in x86 assembler for some reason.

How graph algorithms come in to play in movie end credits.

I did the advent of code this year, and it has a genuinely lovely ad-hoc community built up around it.

That’s it…

And that’s it for another week. Let me know what you thought, or if you have anything you’d like to share about the newsletter or management. Just email me or reply to this newsletter if you get it in your inbox.

Have a great weekend, and good luck in the coming week with your research computing team,

Jonathan

About This Newsletter

Research computing - the intertwined streams of software development, systems, data management and analysis - is much more than technology. It’s teams, it’s communities, it’s product management - it’s people. It’s also one of the most important ways we can be supporting science, scholarship, and R&D today.

So research computing teams are too important to research to be managed poorly. But no one teaches us how to be effective managers and leaders in academia. We have an advantage, though - working in research collaborations have taught us the advanced management skills, but not the basics.

This newsletter focusses on providing new and experienced research computing and data managers the tools they need to be good managers without the stress, and to help their teams achieve great results and grow their careers.

Jobs Leading Research Computing Teams

This week’s new-listing highlights are below; the full listing of 138 jobs is, as ever, available on the job board.

Principal Scientist Manager - Microsoft, Richmond WA USA

We are the Gray Systems Lab (GSL), the applied research group for Microsoft Azure Data. We tackle research problems in the areas of databases, big data, cloud, systems-for-ML, and ML-for-systems. Our mission is to advance the Microsoft Azure Data organization, and we do so in three ways: (i) innovating within Microsoft products and services (ii) publishing in top-tier conferences and journals, and (iii) actively contributing to open-source projects. As a manager in GSL, you will have the opportunity to lead a team of researchers, research engineers, and data scientists to advance state-of-the-art in the areas of databases, systems, and machine learning. By design, GSL looks ahead of where the current product roadmap is, exploring how new workloads, hardware, industry trends, or research breakthroughs could affect our products. This creates a broad charter around innovation that provides lots of freedom in problem selection. As a manager in GSL, you have an important role in guiding the problem selection and coordinate the relationship between GSL and our partner product teams. Prior experience in performing research work or technical innovation in industrial settings will serve you well in this role.

Director of Data Science and Research - Innocence Project, New York NY USA

The Innocence Project was founded in 1992 by Barry C. Scheck and Peter J. Neufeld at the Benjamin N. Cardozo School of Law at Yeshiva University to assist the wrongly convicted who could be proven innocent through DNA testing. The Innocence Project’s groundbreaking use of DNA technology to free innocent people has provided irrefutable proof that wrongful convictions are not isolated or rare events but instead arise from systemic defects. The Director of Data Science and Research leads an exciting and innovative department at the Innocence Project that deepens our understanding of wrongful convictions, facilitates learning and informs criminal legal system reform efforts by mining the data of some 30 years of DNA exonerations, collecting and analyzing the academic and scientific literature on the causes and remedies of wrongful convictions, (including the limitations of various forensic sciences), reviewing internal case and client data, identifying relevant knowledge gaps, and developing strategies to address these gaps. The Director of Data Science and Research sets the tone for data culture in the organization, establishing internal protocols on data collection, setting the Innocence Project’s research strategy, and guiding and executing data research projects from inception to completion.

Senior Manager - Data Science - Aviva, Toronto ON CA

Join a leadership team of actuaries, data scientists and engineers at the forefront of leveraging data to drive decisions at every level of our organization. The insurance industry is undergoing a data-driven, technology revolution and you will be in the driver’s seat. Insurance pricing, underwriting, claims and other core insurance functions are all looking for actionable insights that can be coaxed out of our data. You and your team will work with these business areas to realize this value & further embed data science in our operations.

Senior Computer Scientist & Software Engineer (Scientist 4) - Los Alamos National Laboratory, Los Alamos NM USA

Expert-level knowledge and demonstrated experience in C/C++, C#, Objective C, Swift, Go, or Rust. Demonstrated experience in GPU programming. Demonstrated excellence in technical leadership with significant impact including initiating, organizing, and leading interactions with people from other internal and external organizations and programs to create collaborations.

Large-Scale Data Management - Senior Software Engineer - Oak Ridge National Laboratory, Oak Ridge TN USA

The Data Lifecycle and Scalable Workflows Group at Oak Ridge National Laboratory is seeking a senior software engineer focused on large-scale data management and storage. Architect and develop scalable data management systems to manage petabytes of data. Lead a team of research and technical professionals to develop new capabilities that execute on ORNL’s leading data and compute infrastructures. Principle Investigator / project manager for OLCF/ORNL public data portal, Constellation.

Web Modernization Lead, Program Specialist - NASA, Various USA

Chairs the NASA-wide web management board (WMB) responsible for assessing, streamlining, modernizing, and maintaining NASA’s digital presence and maximizing the effectiveness of digital communications. Leads the development and execution of an integrated web strategy that establishes critical requirements for the Agency’s public web presence and a sustainable governance structure to meet both current and future website needs. Develops and provides oversight and direction for short and long-term planning in setting the Agency’s web strategy.

Head of Bioinformatics IT - SIB Swiss Institute of Bioinformatics, Lausanne CH

Propose and implement (and continuously review) the IT department’s vision and strategy. Provide IT support and advice for a variety of life science projects of national and international importance, included projects involving the processing of sensitive human data for research purposes or public health surveillance. Organize and manage the IT operations including the help desk. Adopt technologies that are in line with the organization’s objectives and needs. Assess the quality and relevance of current infrastructures and make recommendations regarding improvements; manage the hardware and software inventories and their renewals; identify the need for upgrades, configurations or new systems.

Senior HPC Software Developer - General Atomics, San Diego CA USA

General Atomics (GA) has an exciting opportunity for an experienced high performance computing (HPC) Software Developer to support our Advanced Computing initiative within the Energy Group. This position leads the design, development and verification of novel scientific software projects for HPC (scalable to exascale) hardware, including some of the world’s most powerful supercomputers. This position optimizes, benchmarks and tests existing HPC software, including MHD plasma and CFD simulators. This position also involves researching and implementing new mathematical approaches in software, primarily in a research & development environment, in support of the larger goal to solve critical scientific questions using high performance computing.

Research Director, High Performance Computing - IDC, Remote US

IDC is seeking a Research Director to cover research on High Performance Computing (HPC) in its newly formed Performance Intensive Computing Practice within the Infrastructure Systems, Platforms and Technologies Group.

The qualified individual will be responsible for delivering high-quality quantitative and qualitative research projects (many of which are published to IDC’s document library), providing high levels of customer interactions and advisory services, and regularly executing on research deliverables. A successful candidate will become an important member within a team of industry experts and will be a frequent contributor to IDC’s infrastructure and cloud infrastructure services research.

Senior Manager, Data Science - Bell, Toronto ON or Montreal QC CA

As a Senior Manager on this team, you will support 5-7 Data Scientists and lead the development of statistical and machine learning models, working closely with machine learning engineers to launch these models at scale. You will work with exabytes of rich datasets to develop decision-making models that provide recommendations for our customers.

Researcher II - Software Developer for Transportation Data and Modeling Tools - National Renewable Energy Laboratory, Golden CO USA

From day one at NREL, you’ll connect with coworkers driven by the same mission to save the planet. By joining an organization that values a supportive, inclusive, and flexible work environment, you’ll have the opportunity to engage through our eight employee resource groups, numerous employee-driven clubs, and learning and professional development classes. NREL supports inclusive, diverse, and unbiased hiring practices that promote creativity and innovation. By collaborating with organizations that focus on diverse talent pools, reaching out to underrepresented demographics, and providing an inclusive application and interview process, our Talent Acquisition team aims to hear all voices equally. We strive to attract a highly diverse workforce and create a culture where every employee feels welcomed and respected and they can be their authentic selves.

Manager, IT Research Technology - UMass Medical School, Worchester MA USA

Under the general direction of the Associate Chief Information Officer or designee, the Manger, IT Research Technology is responsible for the planning, design, review, programming and implementation of institutional software and biomedical big data solutions at the University of Massachusetts Medical School for advancing research and translation of research findings to clinics. This includes designing, developing and implementing various solutions of capturing and integrating heterogeneous biomedical research data. In addition, he/she will serve as product owner, scientific consultant, and instructor to the Medical School faculty and staff. Perform diverse and complex duties in a manner consistent with a dynamic and active biomedical education and research community. This position is Hybrid

Director of Research Computing - UMass Amherst, Amherst MA USA

Reporting to the Chief Information Officer (CIO), the Director of Research Computing leads the design, planning, and implementation of research computing services. Primary responsibilities include leading and leveraging large-scale data and computational systems; supporting custom software, hardware, and workflows; and facilitating engagements between campus researchers and IT staff to provide sustainable support structures and business models for research IT needs. The Director of Research Computing fully understands research process, goals, and needs, and communicates across disciplines and technical fields to develop creative technical solutions that meet research goals and objectives.

Program Manager, Aquatic Informatics - Danaher, Vancouver BC CA or San Dimas CA USA

Aquatic Informatics (https://aquaticinformatics.com/) is a mission-driven software company that organizes the world’s water data to make it accessible and useful. We provide software solutions that address critical water data management, analytics, and compliance challenges for the rapidly growing water industry. Water monitoring agencies worldwide trust us to acquire, process, model, and publish water information in real time. We offer a full range of solutions, from standalone software packages for individual users, hosted software services, and enterprise-wide national systems. We serve over 1,000 municipal, federal, state/provincial, hydropower, mining, academic, and consulting organizations in over 60 countries that collect, manage, and process large volumes of water data.

Lead Scientist, Data Science - E11 Bio, Alameda CA USA

E11 Bio is on a mission to make single-cell brain circuit mapping a routine part of every neuroscientist’s toolbox. E11 Bio is a non-profit Convergent Research focused research organization located in Alameda, California, pursuing blue-skies neuroscience research yet operating like a nimble, tight-knit start-up. E11 Bio is seeking an experienced and motivated Lead Data Scientist to take a primary role developing the next generation of brain mapping technology. As an early team member at E11 Bio, you will establish and lead the E11 data science team, where you’ll design and implement innovative methods for analyzing 3D optical microscopy images of neural circuit architecture. Working closely with the cellular barcoding and molecular connectomics teams, you’ll design experiments, collect data, and analyze, interpret and present next-generation brain circuit mapping results consistent with our commitment to high impact and open science.

High Performance Computing Digital Facilitation Manager - Australian Government, Department of Defence, Edinburgh SA or Fishermans Bend VIC AU

A world-class High Performance Computing (HPC) Centre is being established by Defence Science and Technology Group (DSTG) to help solve complex real-world Defence problems and support innovative advanced Defence research. The HPC Digital Science Facilitation Manager is responsible for providing support across the Computational Data Intensive Sciences (CDIS) portfolio for facilitating the timely completion of activities and accelerating research outcomes. The role employs extensive knowledge of Science and Technology (S&T) principles to manage all incoming CDIS support requests, providing project management expertise to assist the team to deliver complex multi-disciplinary and innovative solutions for a wide range of Defence S&T and research activities. The successful applicant will work with a large degree of autonomy and apply extensive working knowledge of highly complex scientific computing environments and research workflows to effectively and efficiently manage access to resources performing research in support of divisional research projects.

Data Project Manager, Healthwatch England - UK Civil Service, remote UK

Work closely with the Head of Network Development and the cross- team working group(s) to ensure the aims of the Data and Digital strategy are implemented across England; making sure insight gathered by local Healthwatch can be collected and analysed by Healthwatch England, informs our ways of working and we bring our colleagues and external partners with us. Coordinate and lead the development and delivery of programme projects including data standards and taxonomy and data systems; working closely with programme teams, suppliers and

Director, Data & Analytics Architecture - Qlik, Ottawa ON CA

The Director, Data & Analytics Architecture’s main responsibility will be to identify, analyze and support the enterprise’s business drivers via development of enabling data/information strategies, in conjunction with technology leadership. The Director, Data & Analytics Architecture will lead the development and evolution of Qlik’s data policies and approaches with a goal of supporting data analytics and data science. A data governance practice and standards will be pragmatically defined and managed to derive optimum business value while ensuring data security and privacy requirements are fully met.

Senior Developer Relations Manager – Computational Chemistry - NVIDIA, remote US

This position is for the leader to grow the adoption of NVIDIA computational chemistry and materials capabilities while focusing where NVIDIA should invest in the future. This role will affect numerous industries and developer ecosystems including material science, pharmaceuticals, public sector, agriculture, and manufacturing. In this role you will be responsible for deeply engaging with open-source software developers and other software providers to promote the use of NVIDIA accelerated platforms for chemistry workflows. In addition, you will focus on promoting new methodologies like artificial intelligence along with traditional molecular dynamics and quantum chemistry approaches to produce capabilities of the future. There is a strong focus on end-to-end enhancement of workloads through NVIDIA solutions including advanced neural network architectures and software tools; and accelerated data transformations and flows. This role is highly visible within NVIDIA and for developers building on NVIDIA technologies.

Senior Research Software Engineer - San Diego Supercomputer Center, San Diego CA USA

The S3 group within SDSC will engage in 3 primary activities: i) examining SDSC software creation projects for business models for continued sustainability, and ii) operating and developing the HUBzero infrastructure which involves the creation and ongoing operation and development of the HUBzero software platform, and iii) leading the Science Gateways Community Institute which involves coordinating the efforts of 45 personnel around the United States as members of a distributed virtual organization funded by NSF that provide consulting services to the science gateway community. The Senior Research Software Engineer will apply advanced computational, computer science, data science, and CI software research and development principles, with relevant domain science knowledge where applicable, to perform highly complex research, technology and software development which involve in-depth evaluation of variable factors impacting medium to large projects of broad scope and complexities. The incumbent will design, develop, and optimize components / tools for major HPC / data science / CI projects, resolve complex research and technology development and integration issues and give technical presentations to associated research and technology groups and management.

Senior Data Science Manager - OVO, Bristol UK

The OVO Group’s purpose is to drive progress towards net zero carbon living. Reporting into the Head of Data Science for Retail, you will manage a team of data scientists and analysts developing innovative data products that deliver great business outcomes for our Retail teams. Your team will own the end to end development of machine learning models, covering prediction, propensity, segmentation and NLP (and more!). You’ll be responsible for engaging with different business teams to understand their data science needs and prioritising projects with your stakeholders. You’ll also support the development of your team through mentoring and achievable career progression plans.

Data & AI Senior Project Manager - AstraZeneca, Cambridge UK

The role is for a Senior Project Manager within R&D IT - an outstanding organization at the forefront of the technology revolution in healthcare aligned to the AI Engineering Platform Team. The ambition for this Platform is huge. We are looking for data, AI, technology and project management experts to join R&D IT that will ensure this ambition is met.You will be joining an agile and hardworking team of technologists who share excitement for the delivery of data and analytics solutions and their application in science to change the life of patients. Living and breathing technology is a key factor to enable us to keep pace with the life changing ideas of the scientists. As a Senior IT Project Manager aligned to the AI Engineering Platform Team, you will ensure delivery aligns to the vision and business strategy, and that true business value is delivered to the customer as a result. Having a strong partnership with the business customer driving the demand for the solution is critical to success in this role.

Senior Manager, Biostatistics - BeiGene, various US

Supporting IO clinical development with a focus on target therapies and solid tumors, the position will work with the clinical and regulatory team in designing clinical trials and developing the statistical analysis plan, facilitate the implementation of statistical analyses, provide statistical input to the CSR and scientific presentations/manuscripts, perform statistical functions for submission related activities. The position will participate in process improvement, training, SOP development, and mentoring junior statisticians as a senior member of biostatistics department.

Applied Science Manager, ML Solutions Lab - AWS, London UK

As a ML Solutions Lab Applied Science Manager, you will lead a team of customer-facing scientists and architects to design and deliver advanced ML solutions to solve diverse real-world problems for customers across all industries. You will interact with customers, translate their business problems into ML problems, and lead your team in applying classical ML algorithms and cutting-edge deep learning (DL) and reinforcement learning approaches to areas such as drug discovery, customer segmentation, fraud prevention, capacity planning, predictive maintenance, pricing optimization and event detection.

Deputy IT Director - Argonne National Laboratories, Lemont IL USA

The mission of Argonne’s Computing, Environment, and Life Sciences (CELS) directorate is to enable groundbreaking scientific and technical accomplishments in areas of critical importance to the 21st century. As a Leader of the CELS Systems team you would provide project leadership to the technical operation and support of CLS High Performance Computing and Experimental Computing infrastructure. Lends expertise as a specialist to develop resolutions to complex problems that require the frequent use of creativity. Also responsible for determining objectives of assignments, including responsibility for overall technical planning for projects and activities.

Associate Director of Research and High Performance Computing - Colgate University, Hamilton NY USA

The Associate Director of Research and High Performance Computing (HPC) provides strategic direction, architecture design, and support for research computing services at Colgate University that are directly aligned with the third century plan to support outstanding faculty through the faculty excellence in research initiative. This position is responsible for understanding research needs across campus through consultation with faculty, staff, and students. They will manage the installation, configuration, and maintenance of research servers and software ensuring optimal performance and uptime.

Senior Full-Stack Web Developer for Scientific Applications - Kitware, Clifton Park NY USA

Kitware’s Data and Analytics team helps internal and external customers deliver their next data and AI workflows with our expertise in emerging web and software infrastructure technologies. The Data and Analytics team fosters a collaborative atmosphere where we pride ourselves on the proactive exchange of ideas. Collaborate with our distributed research and development engineering team as well as external collaborators and customers to design and implement new features and fix bugs for our platforms and web applications. Contribute to the overall architecture design of web applications

Maintain scalability, code integrity, and usability of applications

Open Source Engineering Manager - RStudio, Remote USA

You will work hand in hand with team leads and be responsible for managing a small team of your own (mostly developers working on new initiatives that haven’t yet grown a team and team leads). You will coach and mentor your reports to become better software developers, helping work through technical challenges, build processes for repeated tasks, and encourage and support them in expanding their skillsets. You will play a key role in hiring; we expect you to care deeply about diversity, and be excited to develop initiatives that will expand the pool of skilled R users.

Project and Implementation Manager, Research Data Improvement Project - Queen Mary University of London, London UK

Queen Mary University of London is about to launch a major programme of work to improve the quality of data relating to its research and researchers. The objective is to improve the quality of real time information about our research activities to help researchers and the University as a whole to understand and improve performance. The programme of work will also make the process of preparing the submission for future Research Excellence Framework exercises less data-onerous.

Senior Manager - Data Scientist, Fraud - BMO, Toronto ON CA

The Financial Crimes Unit (FCU) brings together our Cybersecurity, Fraud, Physical Security and Resilience Planning capabilities to address the ever-growing and increasingly complex global security environment. It is a highly collaborative effort that greatly enhances BMO’s ability to rapidly prevent, detect, respond to, and recover from all security & crisis threats. This position offers a unique experience to learn from experienced leaders in the industry, join a team building the 21st century model for security and helping grow the good by protecting our customers and communities. As a senior member of the Fraud Analytics Solutions Team, you will be instrumental in exploring automation opportunities, industry best practices and will be accountable for continuously evaluating fraud prevention and detection algorithms and rules engines.

Managing Principal, Data Analytics Consulting - EPAM, Remote CA

EPAM Data Practice is looking for Managing Principal, Data and Analytics Consulting. Senior data professional, visionary, technologist and business executive who can help us to take our Data Consulting service in North America to the next level.

Sr. R&D Manager - Software Technologies - AMD, Markham ON CA

The Software Technologies Team are responsible for forward-looking research and development for AMD’s future products. Due to growth, we are seeking senior manager who will be lead a small team of high-caliber R&D engineers working on leading-edge technologies in AI, Machine Learning, Advanced Graphics, and Multimedia. You will work under the direction of software architecture leadership and be responsible for strategic technical deliverables for the company. You will oversee onsite and remote team members across global geographic regions. You will have well-established experience managing a technology R&D team in machine learning and/or 3D graphics, working in a fast-paced, multi-faceted collaborative environment. You will demonstrate a strong knowledge of technical program management and all phases of the SDLC, particularly in the areas of managing the Early Concept and Feasibility stages of a Software Engineering Project. You will also have experience with Windows, Linux and ChromeBook based systems and their respective device driver and firmware models.

Data Scientist, Data & Analytics Hub, Manager - PwC, Toronto ON CA

A career in our Data and Analytics Hub (“D&A Hub”) within PwC’s Internal Firm Services, will provide you with the opportunity to combine consulting and industry expertise with data science capabilities. We use descriptive and predictive analytical techniques to incorporate internal, third-party, and proprietary data to help answer questions and design solutions to our most pressing business issues. This is a newly formed team that will leverage data to rapidly discover, quantify, and deliver through intelligent analytics and scalable end-to-end business solutions. Working initially across the domains of Finance, Sales & Marketing, and Human Capital, the group will help drive analytics adoption across the Firm, accelerating value delivery, developing in-house talent, and building solutions and trust in data-driven decision making.

Product Director, Data Framework and Ops - GSL, Brentford UK

The mission of the Data Science and Data Engineering (DSDE) organization within GSK Pharmaceuticals R&D is to get the right data, to the right people, at the right time. The Data Framework and Ops team ensures we can do this efficiently, reliably, transparently, and at scale through the creation of a leading-edge, cloud-native data services framework. We focus heavily on developer experience, on strong, semantic abstractions for the data ecosystem, on professional operations and aggressive automation, and on transparency of operations and cost. The Product Director, Data Framework and Ops is a technical-minded individual contributor tasked with ensuring that the organization’s roadmap and OKRs/metrics are designed to best serve the need and have the highest impact.

Senior Manager, Data Architecture, Digital Analytics and Integration - CIBC, Toronto ON CA

Reporting to the Director, Digital Analytics and Integration you will be a key manager in the team responsible for creating, designing, communicating and maintaining the Data Architecture for all pertinent data sources and systems. You will lead efforts to collaborate with multiple cross-functional teams and data owners to identify key data sources and associated elements, design the interconnectivity and processing pipelines between data sources, manage the implementation of various data pipelines and serve as the owner for data ingestion architecture, design and implementation. You will serve as an SME for all pertinent data sources across the bank and will be responsible for bringing together numerous datasets, owned by teams across the bank, for all Digital initiatives.

Manager, Software Engineering - Freenome, San Francisco CA USA

Freenome is hiring a Manager, Engineering to build and manage a team building the next generation of Freenome’s engineering platform to combat cancer and other age-related diseases. You will build and manage a team of world-class Engineers focused on creating Freenome’s engineering platform. You will collaboratively lead the development of our platform and expand the talent base of your team while setting up Engineering and Freenome as a whole for future success.

Sr. Software Engineer - Distro - Anaconda, Remote USA

Anaconda is seeking a talented Sr. Software Engineer - Distro to join our rapidly-growing company. This is an excellent opportunity for you to leverage your skills and apply it to the world of data science and machine learning. Leading technical meetings and actively contributing ideas. Have mentored others in technical areas and/or coordinate with other members on their tasks that map to the team/department goals (i.e. set the direction).

Senior Programmer Analyst - Hospital for Sick Children, Toronto ON CA

The Centre for Computational Medicine (CCM) at the Hospital for Sick Children is looking for a Senior Programmer / Analyst for full-stack web development to support bioinformatics and large-scale genomic data analysis. We are especially interested in candidates with some DevOps experience. Located within the SickKids Research Institute, CCM is a core facility providing the scientific community with expertise in bioinformatics, high-performance computing (HPC), and machine learning. Genomics and health data volumes are expanding rapidly, and make entirely new types of health research and clinical decision-making possible. The CCM is currently developing several exciting projects to analyze diverse biomedical data. These projects involve creating scalable data-intensive infrastructure, pipelines and web portals for data analysis. The successful candidate will be collaborative, analytical, and have a passion for tackling challenges head on. Provide leadership in the form of project management (goal setting, scoping, architecture), code reviews, and mentoring junior developers and co-op students.